Content from Overview of Google Cloud for Machine Learning

Last updated on 2025-10-24

Overview

Questions

- What problem does GCP aim to solve for ML researchers?

- How does using a notebook as a controller help organize ML workflows in the cloud?

- How does GCP compare to AWS for ML workflows?

Objectives

- Understand the basic role of GCP in supporting ML research.

- Recognize how a notebook can serve as a controller for cloud resources.

- Compare GCP and AWS approaches to building and managing ML workflows.

Google Cloud Platform (GCP) provides the basic building blocks researchers need to run machine learning (ML) experiments at scale. Instead of working only on your laptop or a high-performance computing (HPC) cluster, you can spin up compute resources on demand, store datasets in the cloud, and run low-cost notebooks that act as a “controller” for larger training and tuning jobs.

This workshop focuses on using a simple notebook environment as the control center for your ML workflow. Rather than relying on the fully managed, end-to-end features of Google's Vertex AI platform, we show how to use core GCP services (Compute Engine, storage buckets, and SDKs) so you can build and run experiments from scratch.

Why use GCP for machine learning?

GCP provides several advantages that make it a strong option for applied ML:

- Flexible compute: You can choose the hardware that fits your workload:

  - CPUs for lightweight models, preprocessing, or feature engineering.

  - GPUs (e.g., NVIDIA T4, V100, A100) for training deep learning models.

  - TPUs (Tensor Processing Units) for TensorFlow- or JAX-based deep learning. TPUs are custom Google hardware optimized for matrix operations and can provide strong performance and energy efficiency for compatible workloads. Google has reported better performance per watt than GPUs in many TensorFlow benchmarks, though these gains depend heavily on model type and implementation. Historically, TPU support has been limited for PyTorch users; while Google is improving PyTorch integration, the TPU ecosystem still works best for TensorFlow and JAX workflows.

- Data storage and access: Google Cloud Storage (GCS) buckets play the role that S3 plays on AWS: an easy way to store and share datasets between experiments and collaborators.

- From-scratch workflows: Instead of depending on a fully managed ML service, you bring your own frameworks (PyTorch, TensorFlow, scikit-learn, etc.) and run your code the same way you would on your laptop or HPC cluster, but with scalable cloud resources.

- Cost visibility: Billing dashboards and project-level budgets make it easier to track costs and stay within research budgets.

- Sustainability focus: Google aims to operate entirely on carbon-free energy by 2030. Combined with the TPU's focus on efficient matrix computation, this gives GCP a potential edge for researchers interested in energy-conscious ML, though real-world energy efficiency varies by workload and utilization.

In short, GCP provides infrastructure that you control from a notebook environment, allowing you to build and run ML workflows just as you would locally, but with access to scalable hardware and storage.

What about AWS?

In many respects, GCP and AWS offer comparable capabilities for ML research. Both provide scalable compute, storage, and tooling to support everything from quick experiments to production pipelines.

AWS typically offers a broader range of GPU and CPU instance types, along with mature managed services like SageMaker and tighter integration with enterprise infrastructure. GCP, on the other hand, emphasizes TensorFlow and JAX and the availability of TPUs, which may offer energy advantages for certain workloads.

Ultimately, the choice often comes down to framework preference, familiarity, and existing resources, rather than major functional differences between the two platforms.

Comparing infrastructures

Think about your current research setup:

- Do you mostly use your laptop, HPC cluster, AWS, or GCP for ML experiments?

- Which environment feels most transparent for understanding costs and reproducibility?

- If you could offload one infrastructure challenge (e.g., installing GPU drivers, managing storage, or setting up environments), what would it be and why?

Take 3–5 minutes to discuss with a partner or share in the workshop chat.

Key Points

- GCP and AWS both provide the essential components for running ML workloads at scale.

- GCP emphasizes simplicity, open frameworks, and TPU access; AWS offers broader hardware and automation options.

- TPUs are efficient for TensorFlow and JAX, but GPU-based workflows (common on AWS) remain more flexible across frameworks.

- Both platforms now provide strong cost tracking and sustainability tools, with only minor differences in interface and ecosystem integration.

- Using a notebook as a controller provides reproducibility and helps manage compute and storage resources consistently across clouds.

Content from Data Storage: Setting up GCS

Last updated on 2025-10-30

Overview

Questions

- How can I store and manage data effectively in GCP for Vertex AI workflows?

- What are the advantages of Google Cloud Storage (GCS) compared to local or VM storage for machine learning projects?

Objectives

- Explain data storage options in GCP for machine learning projects.

- Describe the advantages of GCS for large datasets and collaborative workflows.

- Outline steps to set up a GCS bucket and manage data within Vertex AI.

Machine learning and AI projects rely on data, making efficient storage and management essential. Google Cloud offers several storage options, but the most common for ML workflows are Virtual Machine (VM) disks and Google Cloud Storage (GCS) buckets.

Consult your institution’s IT before handling sensitive data in GCP

As with AWS, do not upload restricted or sensitive data to GCP services unless explicitly approved by your institution’s IT or cloud security team. For regulated datasets (HIPAA, FERPA, proprietary), work with your institution to ensure encryption, restricted access, and compliance with policies.

Options for storage: VM Disks or GCS

What is a VM disk?

A VM disk is the storage volume attached to a Compute Engine VM or a Vertex AI Workbench notebook. It can store datasets and intermediate results, but it is tied to the lifecycle of the VM.

When to store data directly on a VM disk

- Useful for small, temporary datasets processed interactively.

- Data persists if the VM is stopped, but storage costs continue as long as the disk exists.

- Not ideal for collaboration, scaling, or long-term dataset storage.

Limitations of VM disk storage

- Scalability: Limited by disk size quota.

- Sharing: Harder to share across projects or team members.

- Cost: More expensive per GB compared to GCS for long-term storage.

What is a GCS bucket?

For most ML workflows in GCP, Google Cloud Storage (GCS) buckets are recommended. A GCS bucket is a container in Google's object storage service where you can store an essentially unlimited number of files. Data in GCS can be accessed from Vertex AI training jobs, Workbench notebooks, and other GCP services using a GCS URI (e.g., gs://your-bucket-name/your-file.csv).
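A GCS URI packs two pieces of information: the bucket name (everything before the first slash after `gs://`) and the object name (everything after it, slashes included, since GCS "folders" are just part of the object name). The sketch below splits a URI into those parts; `parse_gcs_uri` is a hypothetical helper, not part of any Google library.

```python
from __future__ import annotations


def parse_gcs_uri(uri: str) -> tuple[str, str]:
    """Split a gs:// URI into (bucket_name, object_name).

    The object name may be empty (a URI pointing at the bucket itself) and
    may contain slashes, because GCS treats "folders" as part of the name.
    """
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a GCS URI: {uri!r}")
    # Everything up to the first "/" is the bucket; the rest is the object name.
    bucket_name, _, object_name = uri[len(prefix):].partition("/")
    if not bucket_name:
        raise ValueError(f"missing bucket name in {uri!r}")
    return bucket_name, object_name


print(parse_gcs_uri("gs://your-bucket-name/your-file.csv"))
```

The two returned strings correspond to what the `google-cloud-storage` client expects in `client.bucket(bucket_name)` and `bucket.blob(object_name)`.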

Benefits of using GCS (recommended for ML workflows)

- Separation of storage and compute: Data remains available even if VMs or notebooks are deleted.

- Easy sharing: Buckets can be accessed by collaborators with the right IAM roles.

- Integration with Vertex AI and BigQuery: Read and write data directly using other GCP tools.

- Scalability: Handles datasets of any size without disk limits.

- Cost efficiency: Lower cost than persistent disks (VM storage) for long-term storage.

- Data durability: Objects are stored redundantly (across zones for regional buckets, across regions for multi-region buckets), making them highly durable and available.

Recommended approach: GCS buckets

To upload our Titanic dataset to a GCS bucket, we’ll follow these steps:

- Log in to the Google Cloud Console.

- Create a new bucket (or use an existing one).

- Upload your dataset files.

- Use the GCS URI to reference your data in Vertex AI workflows.

1. Sign in to Google Cloud Console

- Go to console.cloud.google.com/welcome?project=doit-rci-mlm25-4626 and log in with your credentials.

3. Create a new bucket

- Click Create bucket.

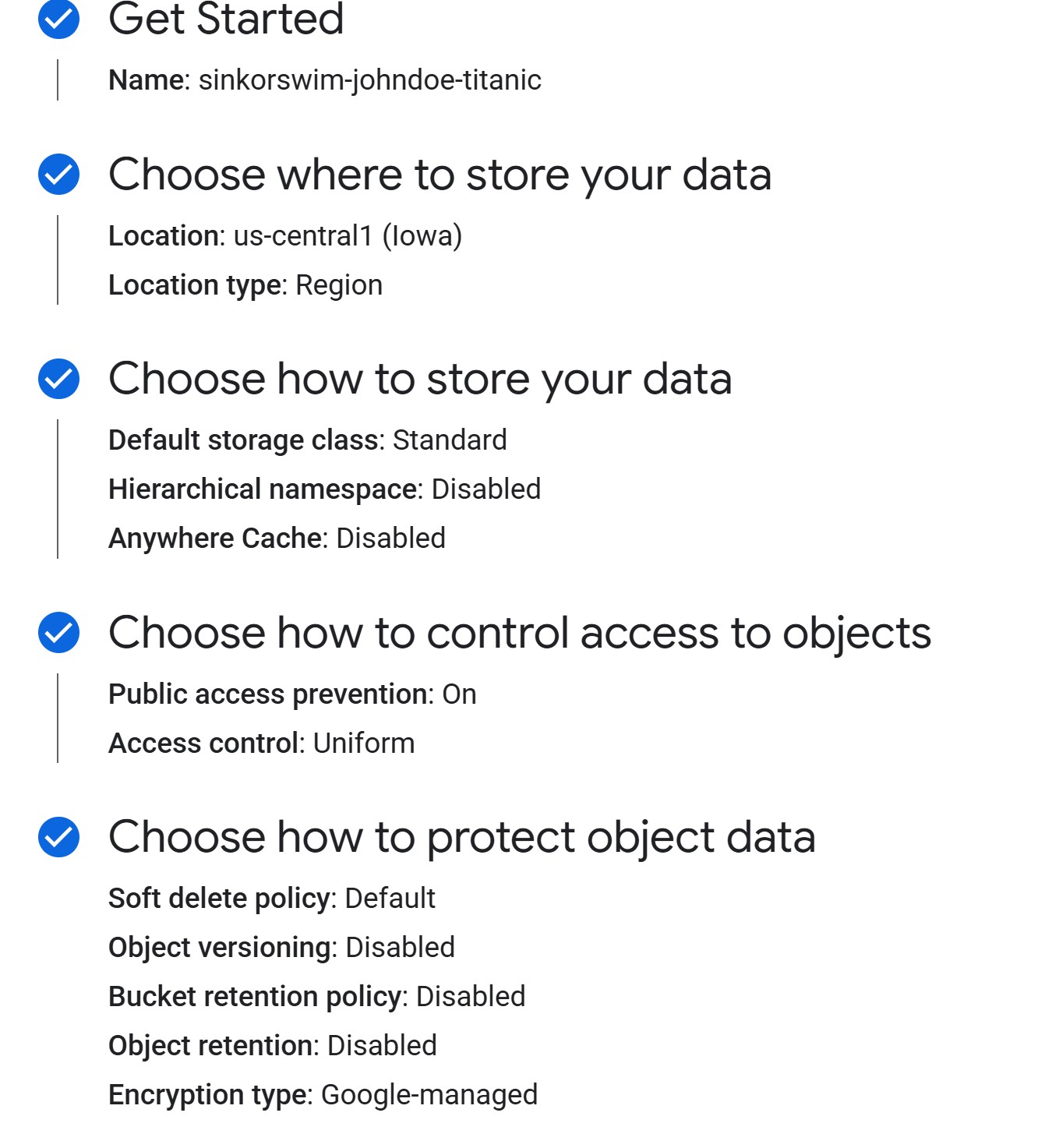

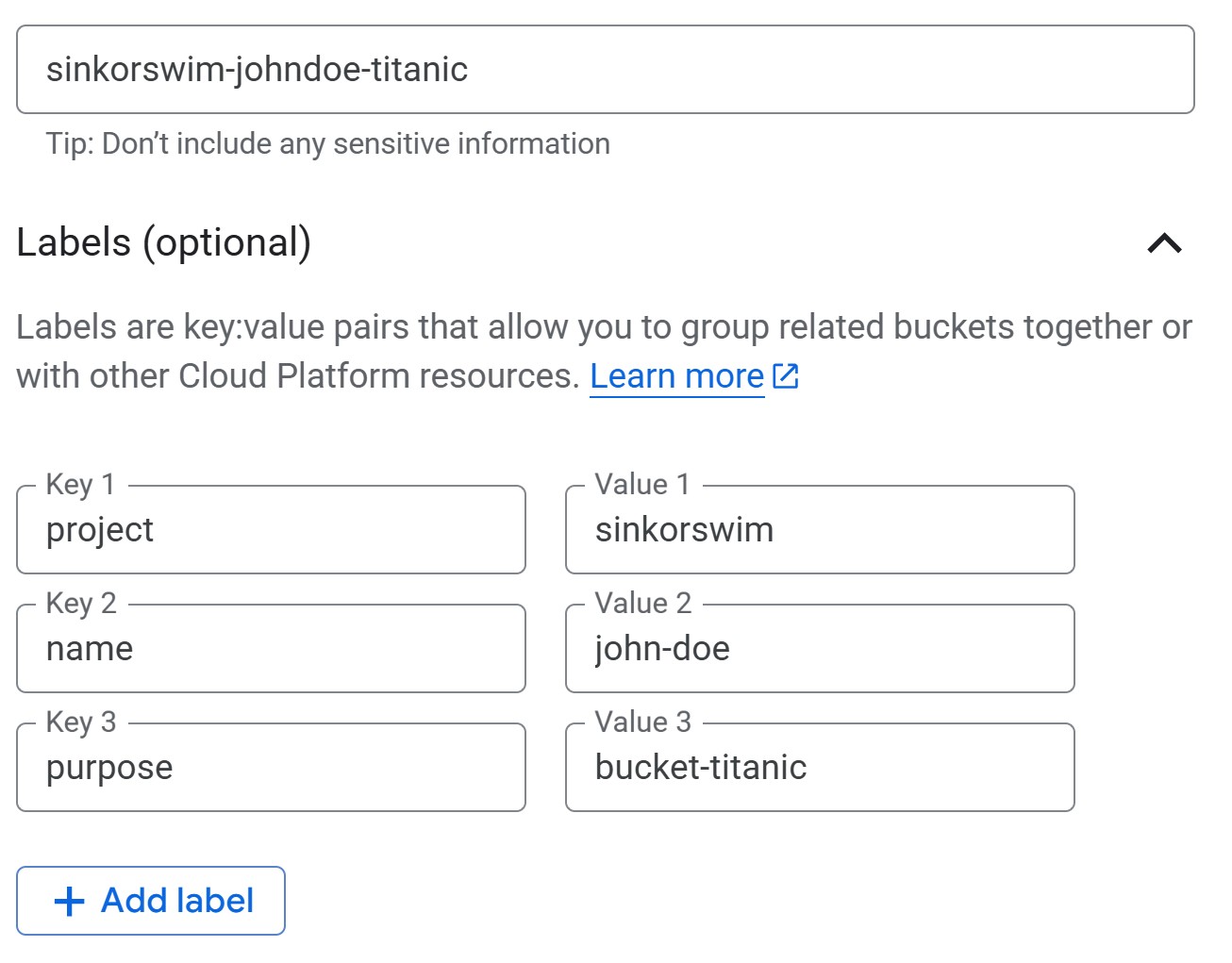

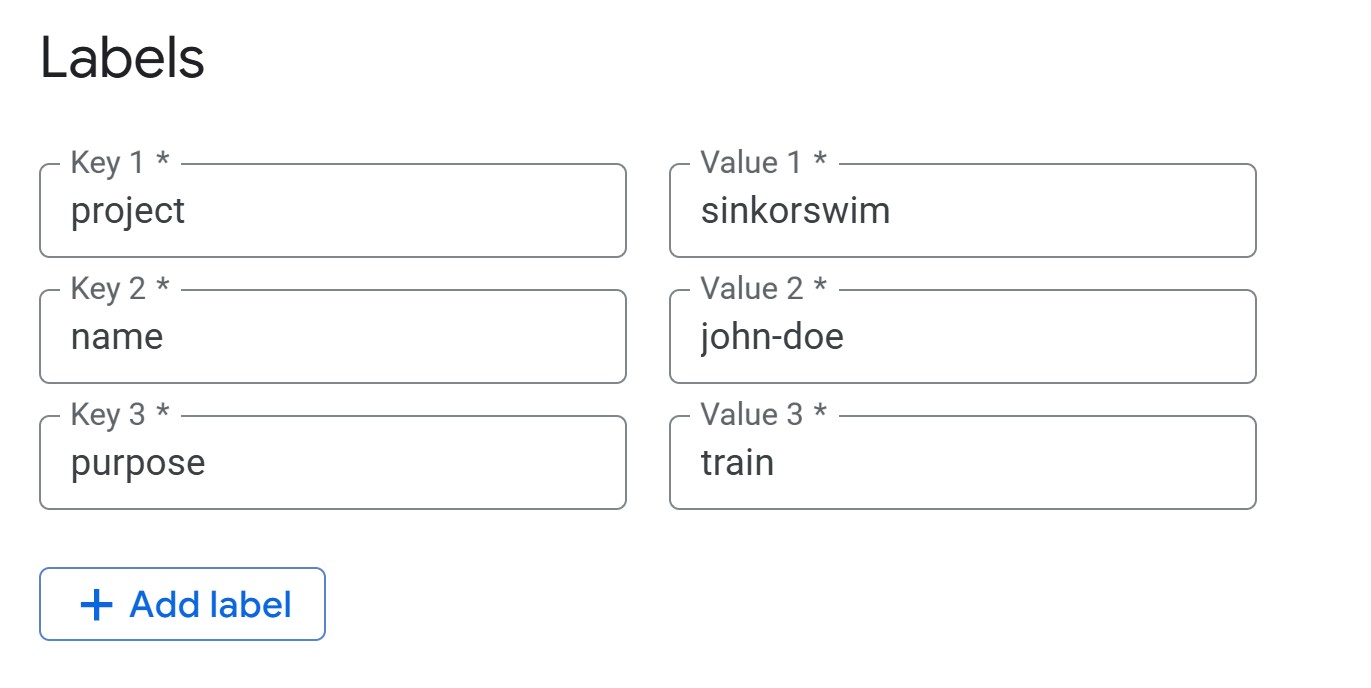

3a. Getting Started (bucket name and tags)

- Provide a bucket name: Enter a globally unique name. For this workshop, we can use the following naming convention to easily locate our buckets: teamname-firstlastname-dataname (e.g., sinkorswim-johndoe-titanic)

- Add labels (tags) to track costs: Add labels to track resource usage and billing. If you're working in a shared account, this step is mandatory. If not, it's still recommended to help you track your own costs!

  - project = teamname (your team's name)

  - name = firstname-lastname (your name)

  - purpose = bucket-dataname (the bucket- prefix followed by the name of the dataset)

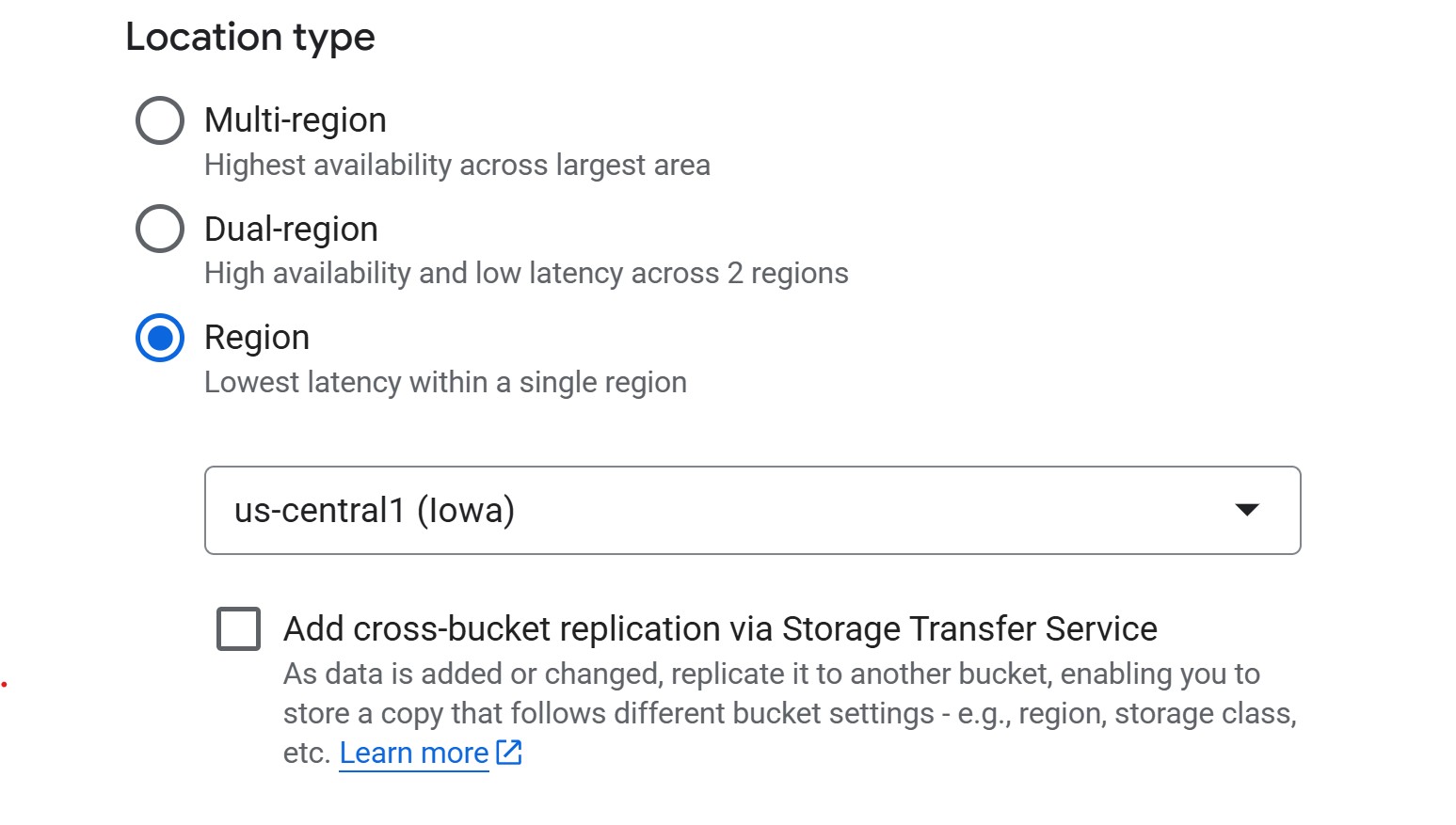
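The naming convention and labels above can be generated and sanity-checked before you type them into the console. This is a minimal sketch: the helper names are hypothetical, and the regular expressions encode a simplified subset of Google's published rules (bucket names of 3–63 lowercase letters, digits, hyphens, underscores, or periods, starting and ending with a letter or digit; label keys starting with a lowercase letter; keys and values up to 63 lowercase letters, digits, underscores, or hyphens). Special cases such as longer dotted bucket names or international characters in labels are deliberately ignored.

```python
from __future__ import annotations

import re

# Simplified subset of the GCS naming rules (assumption: ASCII only, no dotted long names).
BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")
LABEL_KEY_RE = re.compile(r"[a-z][a-z0-9_-]{0,62}")
LABEL_VALUE_RE = re.compile(r"[a-z0-9_-]{0,63}")


def workshop_bucket_name(team: str, first: str, last: str, dataname: str) -> str:
    """Build teamname-firstlastname-dataname, e.g. sinkorswim-johndoe-titanic."""
    return f"{team}-{first}{last}-{dataname}".lower()


def workshop_bucket_labels(team: str, first: str, last: str, dataname: str) -> dict[str, str]:
    """Build the project / name / purpose labels used in this workshop."""
    return {
        "project": team.lower(),
        "name": f"{first}-{last}".lower(),
        "purpose": f"bucket-{dataname}".lower(),
    }


def naming_problems(bucket_name: str, labels: dict[str, str]) -> list[str]:
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    if not BUCKET_NAME_RE.fullmatch(bucket_name):
        problems.append(f"bucket name {bucket_name!r} breaks the naming rules")
    for key, value in labels.items():
        if not LABEL_KEY_RE.fullmatch(key):
            problems.append(f"label key {key!r} is invalid")
        if not LABEL_VALUE_RE.fullmatch(value):
            problems.append(f"label value {value!r} (key {key!r}) is invalid")
    return problems


name = workshop_bucket_name("sinkorswim", "John", "Doe", "titanic")
labels = workshop_bucket_labels("sinkorswim", "John", "Doe", "titanic")
print(name, labels, naming_problems(name, labels))
```

If you later create buckets from Python instead of the console, the same dictionary can be assigned to the client library's `Bucket.labels` property.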

3b. Choose where to store your data

When creating a storage bucket in Google Cloud, the best practice for most machine learning workflows is to use a regional bucket in the same region as your compute resources (for example, us-central1). This setup provides the lowest latency and avoids network egress charges when training jobs read from storage, while also keeping costs predictable. A multi-region bucket, on the other hand, can make sense if your primary goal is broad availability or if collaborators in different regions need reliable access to the same data; the trade-off is higher cost and the possibility of extra egress charges when pulling data into a specific compute region. For most research projects, a regional bucket with the Standard storage class, uniform access control, and public access prevention enabled offers a good balance of performance, security, and affordability.

- Region (cheapest, good default). For instance, us-central1 (Iowa) costs $0.020 per GB-month.

- Multi-region (higher redundancy, more expensive).

3c. Choose how to store your data (storage class)

When creating a bucket, you’ll be asked to choose a storage class, which determines how much you pay for storing data and how often you’re allowed to access it without extra fees.

- Standard – best for active ML/AI workflows. Training data is read and written often, so this is the safest default.

- Nearline / Coldline / Archive – designed for backups or rarely accessed files. These cost less per GB to store, but you pay retrieval fees if you read them during training. Not recommended for most ML projects where data access is frequent.
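Besides retrieval fees, the colder classes have minimum storage durations (30, 90, and 365 days for Nearline, Coldline, and Archive, as listed in this episode's cost section). Deleting an object early, or overwriting it (which also counts as deleting the old copy), is billed as if it had been stored for the full minimum. The sketch below computes those leftover billed days; `early_deletion_days` is a hypothetical helper, and it only counts days, not dollars.

```python
# Minimum storage durations in days; Standard storage has no minimum.
MIN_STORAGE_DAYS = {"STANDARD": 0, "NEARLINE": 30, "COLDLINE": 90, "ARCHIVE": 365}


def early_deletion_days(storage_class: str, days_stored: int) -> int:
    """Extra days of storage billed if an object is deleted or overwritten early.

    GCS bills an early deletion as if the object had been kept for the
    class's full minimum duration, so the charge covers the remaining days.
    """
    minimum = MIN_STORAGE_DAYS[storage_class.upper()]
    return max(0, minimum - days_stored)


# A checkpoint overwritten after 10 days, in each storage class
for storage_class in MIN_STORAGE_DAYS:
    print(storage_class, early_deletion_days(storage_class, 10))
```

Checkpoints and intermediate files that are rewritten every few days would keep triggering these charges in a cold class, which is why Standard is the safer default for active ML data.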

You may also see an option to “Enable hierarchical namespace”. This is mainly useful for large-scale analytics pipelines. For most ML workflows, the standard flat namespace is simpler and fully compatible, so it's best to leave this option off.

3d. Choose how to control access to objects

For ML projects, you should prevent public access so that only authorized users can read or write data. This keeps research datasets private and avoids accidental exposure.

When prompted to choose an access control method, choose uniform access unless you have a very specific reason to manage object-level permissions.

- Uniform access (recommended): Simplifies management by enforcing permissions at the bucket level using IAM roles. It's the safer and more maintainable choice for teams. Once enabled, it can still be turned off for 90 days; after that, it becomes permanent.

- Fine-grained access: Allows per-file permissions using ACLs, but adds complexity and is rarely needed outside of web hosting or mixed-access datasets.

3e. Choose how to protect object data

GCP automatically protects all stored data, but you can enable additional layers of protection depending on your project’s needs. For most ML or research workflows, you’ll want to balance safety with cost.

- Soft delete policy (recommended for shared projects): Keeps deleted objects recoverable for a short period (default is 7 days). This is useful if team members might accidentally remove data. You can set a custom retention duration, but longer windows increase storage costs.

- Object versioning: Creates new versions of files when they’re modified or overwritten. This can be helpful for tracking model outputs or experiment logs but may quickly increase costs. Enable only if you expect frequent overwrites and need rollback capability.

- Retention policy (for compliance use only): Prevents deletion or modification of objects for a fixed time window. This is typically required for regulated data but should be avoided for active ML projects, since it can block normal cleanup and retraining workflows.

In short: keep the default soft delete unless you have specific compliance requirements. Use object versioning sparingly, and avoid retention locks unless mandated by policy.

4. Upload files to the bucket

- If you haven't yet, download the data for this workshop (Right-click → Save as): data.zip

- Extract the zip folder contents (Right-click → Extract all on Windows; double-click on macOS).

- In the bucket dashboard, click Upload Files.

- Select your Titanic CSVs and upload.

Note the GCS URI for your data

After uploading, click on a file and find its gs:// URI (e.g., gs://sinkorswim-johndoe-titanic/titanic_test.csv). This URI will be used to access the data later.

Adjust bucket permissions

Return to the Google Cloud Console (where we created our bucket) and search for “Cloud Shell Editor”. Open a shell editor and run the commands below, replacing the bucket name with your bucket's name:

SH

# Grant read permissions on the bucket
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectViewer"

# Grant write (create-only) permissions on the bucket
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectCreator"

# (Only if you also need overwrite/delete)
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectAdmin"

These bindings give the project's default Compute Engine service account, which our future VMs run as, permission to read and create objects in the bucket and, with the last command, to overwrite or delete them.
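To confirm the bindings took effect, you can inspect the bucket's IAM policy. The sketch below assumes the `bindings` list returned by the `google-cloud-storage` call `bucket.get_iam_policy(requested_policy_version=3).bindings`, where each entry is a dict with a `"role"` string and a `"members"` collection; `has_binding` and `missing_roles` are hypothetical helpers. It only checks direct, exact-role grants on the bucket: roles inherited from the project, or implied by a broader role such as `roles/storage.objectAdmin`, are not detected.

```python
# Roles granted by the first two commands above.
REQUIRED_ROLES = ("roles/storage.objectViewer", "roles/storage.objectCreator")


def has_binding(bindings, member: str, role: str) -> bool:
    """True if `member` is listed in a binding for exactly `role`."""
    return any(
        binding.get("role") == role and member in binding.get("members", ())
        for binding in bindings
    )


def missing_roles(bindings, member: str, roles=REQUIRED_ROLES) -> list:
    """Roles from `roles` that `member` has not been granted directly on the bucket."""
    return [role for role in roles if not has_binding(bindings, member, role)]
```

In a notebook, `missing_roles(policy.bindings, "serviceAccount:549047673858-compute@developer.gserviceaccount.com")` returning an empty list would indicate both grants are present. Reading a bucket's IAM policy needs its own permission, so the call may be denied for the VM's service account even when object access works.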

Data transfer & storage costs explained

GCS costs are based on storage class, data transfer, and operations (requests).

- Standard storage: Data storage cost is based on region. In us-central1, the cost is ~$0.02 per GB per month.

- Uploading data (ingress): Copying data into a GCS bucket from your laptop, campus HPC, or another provider is free.

- Downloading data out of GCP (egress): Refers to data leaving Google's network to the public internet, such as downloading files from GCS to your local machine. Typical cost is around $0.12 per GB to the U.S. and North America, more for other continents.

- Cross-region access: If your bucket is in one region and your compute runs in another, you'll pay an egress fee of about $0.01–0.02 per GB within North America, higher if crossing continents.

- Reading (GET) requests: Each read operation incurs a small API request fee of roughly $0.004 per 10,000 requests.

  - Example: a training job that loads 10,000 image files from GCS (one object per image) would make about 10,000 GET requests, costing around $0.004 total. Reading a large file such as a 1 GB CSV or TFRecord shard counts as a single GET request.

- Writing and listing (PUT/POST/LIST) requests: Uploading, creating, or modifying objects, as well as listing a bucket's contents, costs about $0.05 per 10,000 requests.

  - Example: saving one model checkpoint file (e.g., model-weights.h5 or model.pt) triggers one PUT request. A training pipeline that saves a few dozen checkpoints or logs would cost well under one cent in request fees.

- Deleting data: Removing objects or buckets does not incur transfer costs. If you download data before deleting, you pay for the egress, but deleting directly in the console or CLI is free. For Nearline, Coldline, or Archive storage classes, deleting before the minimum storage duration (30, 90, or 365 days) triggers an early-deletion fee.
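The request and transfer rates above can be combined into a quick per-job estimate. This is a sketch using the approximate prices quoted in the list (assuming Standard storage in North America and the upper end of the cross-region range); `gcs_job_cost` is a hypothetical helper, and the current GCS pricing page should be checked before relying on the numbers.

```python
# Approximate prices from the list above (assumption: Standard storage, North America).
GET_PRICE_PER_10K = 0.004    # reads (GET), $ per 10,000 requests
WRITE_PRICE_PER_10K = 0.05   # writes and lists (PUT/POST/LIST), $ per 10,000 requests
CROSS_REGION_PER_GB = 0.02   # upper end of the North American cross-region range


def gcs_job_cost(get_requests: int, write_requests: int, cross_region_gb: float = 0.0) -> float:
    """Estimate request fees plus cross-region transfer for one job, in dollars."""
    reads = get_requests / 10_000 * GET_PRICE_PER_10K
    writes = write_requests / 10_000 * WRITE_PRICE_PER_10K
    transfer = cross_region_gb * CROSS_REGION_PER_GB
    return reads + writes + transfer


# 10,000 image reads plus 36 checkpoint uploads, bucket in the same region
print(f"same region:  ${gcs_job_cost(10_000, 36):.5f}")
# The same job, but pulling 100 GB from a bucket in another region
print(f"cross-region: ${gcs_job_cost(10_000, 36, cross_region_gb=100):.5f}")
```

Request fees stay at fractions of a cent, while transfer scales with data volume, which is why co-locating the bucket and compute matters far more than minimizing the number of reads.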

For detailed pricing, see GCS Pricing Information.

Challenge: Estimating Storage Costs

1. Estimate the total cost of storing 1 GB in GCS Standard storage (us-central1) for one month assuming:

- Storage duration: 1 month

- Dataset retrieved 100 times for model training and tuning

- Data is downloaded once out of GCP at the end of the project

Hints

- Storage cost: $0.02 per GB per month

- Egress (download out of GCP): $0.12 per GB

- GET requests: $0.004 per 10,000 requests (100 requests ≈ free for our purposes)

2. Repeat the above calculation for datasets of 10 GB, 100 GB, and 1 TB (1024 GB).

- 1 GB:

  - Storage: 1 GB × $0.02 = $0.02

  - Egress: 1 GB × $0.12 = $0.12

  - Requests: ~0 (100 reads ≈ $0.00004)

  - Total: $0.14

- 10 GB:

  - Storage: 10 GB × $0.02 = $0.20

  - Egress: 10 GB × $0.12 = $1.20

  - Requests: ~0

  - Total: $1.40

- 100 GB:

  - Storage: 100 GB × $0.02 = $2.00

  - Egress: 100 GB × $0.12 = $12.00

  - Requests: ~0

  - Total: $14.00

- 1 TB (1024 GB):

  - Storage: 1024 GB × $0.02 = $20.48

  - Egress: 1024 GB × $0.12 = $122.88

  - Requests: ~0

  - Total: $143.36
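The same arithmetic can be scripted so you can try other sizes, durations, or access patterns. A minimal sketch using the hint prices; `challenge_cost` is a hypothetical helper. Unlike the hand calculation it includes the request fee for the 100 reads, which adds only $0.00004 and disappears when rounding to cents.

```python
STORAGE_PER_GB_MONTH = 0.02  # Standard storage, us-central1
EGRESS_PER_GB = 0.12         # download out of GCP to North America
GET_PER_10K = 0.004          # $ per 10,000 GET requests


def challenge_cost(size_gb: float, months: int = 1, reads: int = 100, downloads: int = 1) -> float:
    """Total dollars for storing, reading (same region), and downloading a dataset."""
    storage = size_gb * STORAGE_PER_GB_MONTH * months
    egress = size_gb * EGRESS_PER_GB * downloads
    requests = reads / 10_000 * GET_PER_10K
    return storage + egress + requests


for size in (1, 10, 100, 1024):
    print(f"{size:>5} GB: ${challenge_cost(size):.2f}")
```

Egress dominates every row: downloading the data once costs six times as much as storing it for a month.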

Removing unused data (complete after the workshop)

After you are done using your data, remove unused files/buckets to stop costs:

- Option 1: Delete files only – if you plan to reuse the bucket.

- Option 2: Delete the bucket entirely – if you no longer need it.
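Option 1 can also be done from a notebook. The sketch below assumes `bucket` behaves like a `google.cloud.storage.Bucket` (its `list_blobs(prefix=...)` yields blobs with a `name` and a `delete()` method); `delete_objects` is a hypothetical helper that defaults to a dry run so you can review what would be removed.

```python
def delete_objects(bucket, prefix=None, dry_run=True):
    """Delete the objects in `bucket`, optionally only those under `prefix`.

    With dry_run=True (the default) nothing is deleted; the names that
    would be removed are returned so you can review them first.
    """
    names = []
    for blob in bucket.list_blobs(prefix=prefix):
        names.append(blob.name)
        if not dry_run:
            blob.delete()
    return names
```

For example, `delete_objects(client.bucket("sinkorswim-johndoe-titanic"))` previews the deletion and adding `dry_run=False` performs it. For Option 2, the client library's `Bucket.delete()` removes the bucket once it is empty. With soft delete enabled, deleted objects remain recoverable, and billed, until the soft-delete window ends.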

When does BigQuery come into play?

BigQuery is Google Cloud’s managed data warehouse for storing and analyzing large tabular datasets using SQL. It’s designed for interactive querying and analytics rather than file storage. For most ML workflows—especially smaller projects or those focused on images, text, or modest tabular data—BigQuery isn’t needed. Cloud Storage (GCS) buckets are usually enough: they can store data efficiently and let you stream files directly into your training code without downloading them locally.

BigQuery becomes useful when you’re working with large, structured datasets that multiple team members need to query or explore collaboratively. Instead of reading entire files, you can use SQL to retrieve only the subset of data you need. Teams can share results through saved queries or views and control access at the dataset or table level with IAM. BigQuery also integrates with Vertex AI, allowing structured data stored there to connect directly to training pipelines. The main trade-off is cost: you pay for both storage and the amount of data scanned by queries.

In short, use GCS buckets for storing and streaming files into typical ML workflows, and consider BigQuery when you need a shared, queryable workspace for large tabular datasets.

Key Points

- Use GCS for scalable, cost-effective, and persistent storage in GCP.

- Persistent disks are suitable only for small, temporary datasets.

- Track your storage, transfer, and request costs to manage expenses.

- Regularly delete unused data or buckets to avoid ongoing costs.

Content from Notebooks as Controllers

Last updated on 2025-10-30

Overview

Questions

- How do you set up and use Vertex AI Workbench notebooks for machine learning tasks?

- How can you manage compute resources efficiently using a “controller” notebook approach in GCP?

Objectives

- Describe how to use Vertex AI Workbench notebooks for ML workflows.

- Set up a Jupyter-based Workbench instance as a controller to manage compute tasks.

- Use the Vertex AI SDK to launch training and tuning jobs on scalable instances.

Setting up our notebook environment

Vertex AI Workbench provides JupyterLab-based environments that can be used to orchestrate machine learning workflows. In this workshop, we will use a Workbench Instance, the recommended option going forward, as the other Workbench notebook types are being deprecated.

Workbench Instances come with JupyterLab 3 pre-installed and are configured with GPU-enabled ML frameworks (TensorFlow, PyTorch, etc.), making it easy to start experimenting without additional setup. Learn more in the Workbench Instances documentation.

Using the notebook as a controller

The notebook instance functions as a controller to manage more resource-intensive tasks. By selecting a modest machine type (e.g., n1-standard-4), you can perform lightweight operations locally in the notebook while using the Vertex AI Python SDK to launch compute-heavy jobs on larger machines (e.g., GPU-accelerated) when needed.

This approach minimizes costs while giving you access to scalable infrastructure for demanding tasks like model training, batch prediction, and hyperparameter tuning.

We will follow these steps to create our first Workbench Instance:

1. Navigate to Workbench

- In the Google Cloud Console, search for “Workbench.”

- Click the “Instances” tab (this is the supported path going forward).

- Pin Workbench to your navigation bar for quick access.

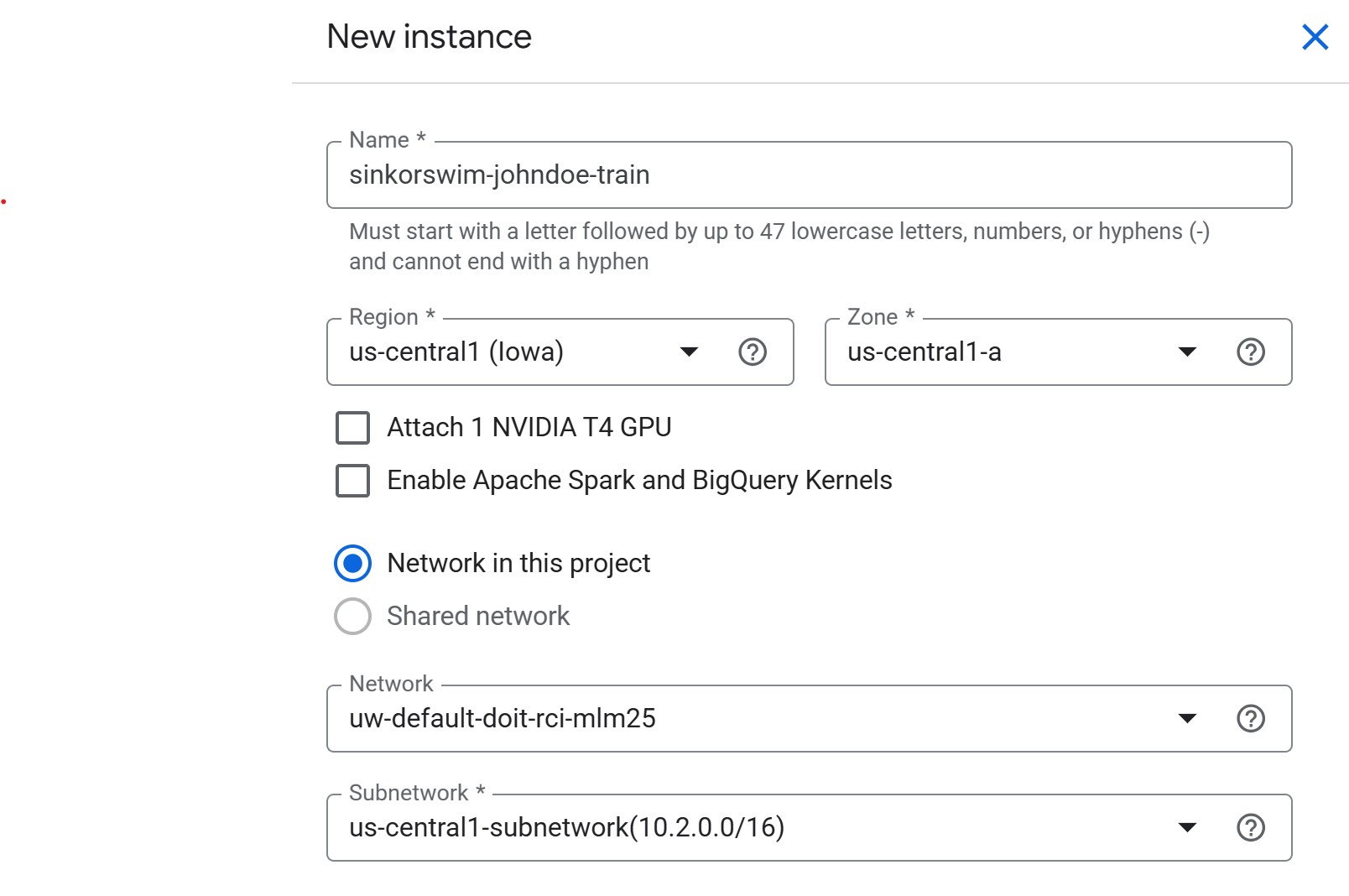

2. Create a new Workbench Instance

Initial settings

- Click Create New near the top of the Workbench page.

- Name: For this workshop, we can use the following naming convention to easily locate our notebooks: teamname-yourname-purpose (e.g., sinkorswim-johndoe-train)

- Region: Choose the same region as your storage bucket (e.g., us-central1). This avoids cross-region transfer charges and keeps data access latency low.

  - If you are unsure, check your bucket's location in the Cloud Storage console (click the bucket name → look under “Location”).

- Zone: us-central1-a (or another zone in us-central1, like -b or -c)

  - If capacity or GPU availability is limited in one zone, switch to another zone in the same region.

- NVIDIA T4 GPU: Leave unchecked for now

  - We will request GPUs for training jobs separately. Attaching one here increases idle costs.

- Apache Spark and BigQuery Kernels: Leave unchecked

  - Enable only if you specifically need Spark or BigQuery notebooks; otherwise, it adds unnecessary images.

- Network in this project: Required selection

  - This option must be selected; shared environments do not allow using external or default networks.

  - This ensures your instance connects to the shared VPC for the workshop.

- Network / Subnetwork: Leave as pre-filled.
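A zone name is its region plus a one-letter suffix, so checking that a notebook and a bucket share a region reduces to string handling. A minimal sketch with hypothetical helpers; it assumes the bucket location is a single-region string such as the one shown in the console (the Cloud Storage API typically reports it in upper case, e.g. `US-CENTRAL1`, so the comparison ignores case). A multi-region location such as `US` never matches a single zone.

```python
def zone_to_region(zone: str) -> str:
    """Strip the zone suffix, e.g. 'us-central1-a' -> 'us-central1'."""
    region, sep, suffix = zone.rpartition("-")
    if not sep or not region or not suffix:
        raise ValueError(f"unexpected zone format: {zone!r}")
    return region


def same_region(bucket_location: str, zone: str) -> bool:
    """Compare a bucket location (e.g. 'US-CENTRAL1') with a VM zone, ignoring case."""
    return bucket_location.lower() == zone_to_region(zone).lower()


print(same_region("US-CENTRAL1", "us-central1-a"))
```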

Advanced settings: Details (tagging)

- IMPORTANT: Open the “Advanced options” menu next.

- Labels (required for cost tracking): Under the Details menu, add the following tags (all lowercase) so that you can track the total cost of your activity on GCP later:

  - project = teamname (your team's name)

  - name = firstname-lastname (your name)

  - purpose = train (i.e., the notebook's overall purpose: train, tune, RAG, etc.)

Advanced Settings: Environment

While we won’t modify environment settings during this workshop, it’s useful to understand what these options control when creating or editing a Workbench Instance in Vertex AI Workbench.

All Workbench environments use JupyterLab 3 by default, with the latest NVIDIA GPU drivers, CUDA libraries, and Intel optimizations preinstalled. You can optionally select JupyterLab 4 (currently in preview) or provide a custom container image to run your own environment (for example, a Docker image containing specialized ML frameworks or dependencies). If needed, you can also specify a post-startup script stored in Cloud Storage (gs://path/to/script.sh) to automatically configure the instance (install packages, mount buckets, etc.) when it boots.

See: Vertex AI Workbench release notes for supported versions and base images.

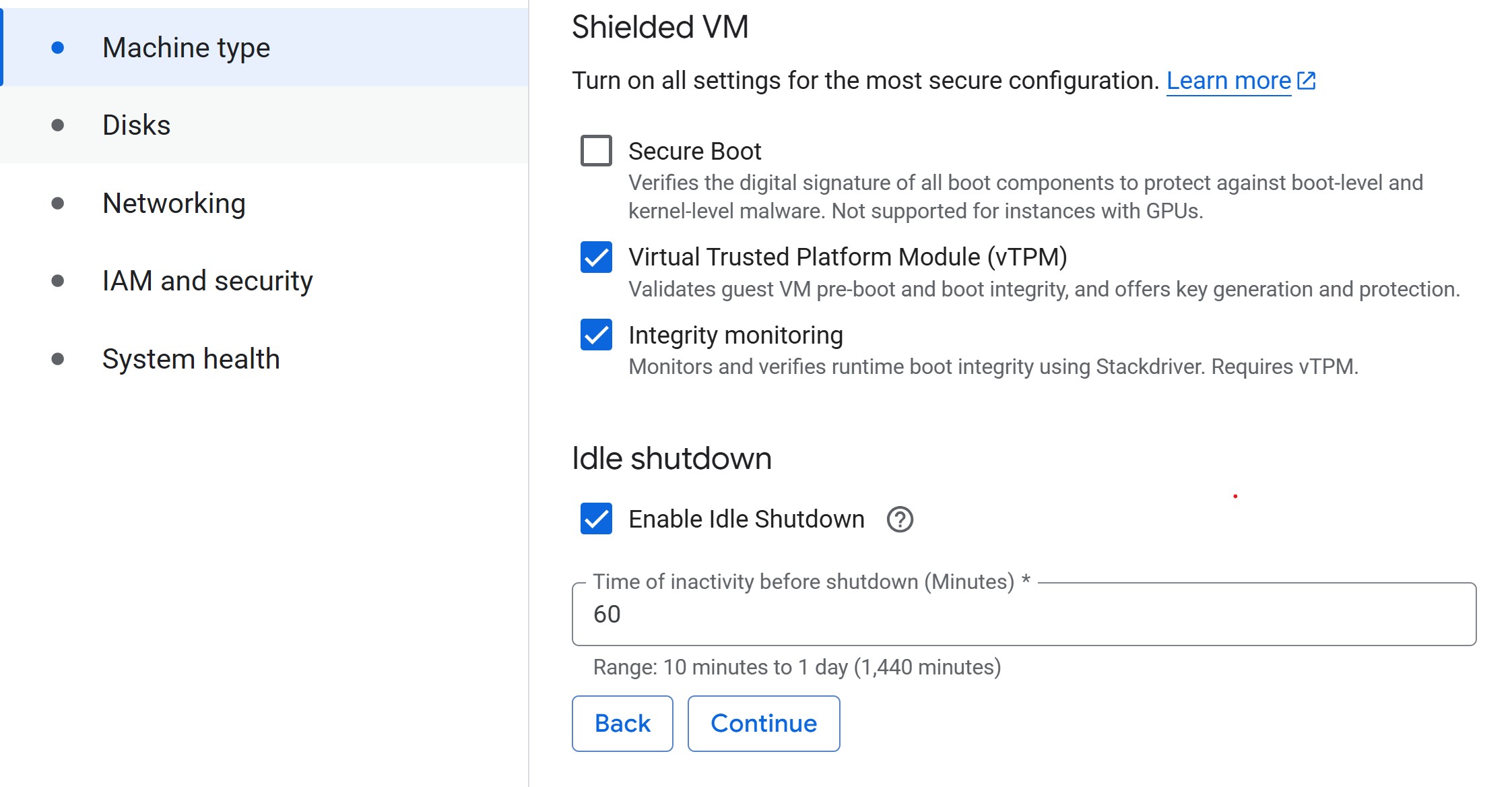

Advanced settings: Machine Type

- Machine type: Select a small machine (e.g., n2-standard-2) to act as the controller.

  - This keeps costs low while you delegate heavy lifting to training jobs.

  - For guidance on common machine types for ML, refer to Instances for ML on GCP.

- Set idle shutdown: To save on costs when you aren't doing anything in your notebook, lower the default idle shutdown time to 60 (minutes).

Advanced Settings: Disks

Each Vertex AI Workbench instance uses Persistent Disks (PDs) to store your system files and data. You’ll configure two disks when creating a notebook: a boot disk and a data disk. We’ll leave these at their default settings, but it’s useful to understand the settings for future work.

Boot Disk

- Keep this fixed at 100 GB (Balanced PD), the default minimum.

- It holds the OS, JupyterLab, and ML libraries but not your datasets.

- Estimated cost: about $10 / month (~$0.014 / hr).

- You rarely need to resize this, though you can increase it to 150–200 GB if you plan to install large environments, custom CUDA builds, or multiple frameworks.

Data Disk

- This is where your datasets, checkpoints, and outputs live.

- Use a Balanced PD by default, or an SSD PD only for high-I/O workloads.

- A good rule of thumb is to allocate ≈ 2× your dataset size, with a minimum of 150 GB and a maximum of 1 TB. For example:

  - 20 GB dataset → 150 GB data disk (minimum)

  - 100 GB dataset → 200 GB data disk

  - Larger datasets → keep the full dataset in Cloud Storage (gs://) and copy only subsets locally.

- Persistent Disks can be enlarged at any time without downtime (they cannot be shrunk), so it's best to start small and expand when needed.
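The sizing rule of thumb above translates directly into a few lines of code. A sketch with a hypothetical helper; it assumes 1 TB = 1024 GB (the convention used in the storage-cost challenge) and rounds up to whole GB.

```python
from __future__ import annotations

import math


def recommended_data_disk_gb(dataset_gb: float, min_gb: int = 150, max_gb: int = 1024) -> tuple[int, bool]:
    """Apply the ~2x-dataset rule with a floor and a cap.

    Returns (disk_size_gb, fits). `fits` is False when 2x the dataset would
    exceed the cap, i.e. keep the full dataset in Cloud Storage instead.
    """
    target = max(min_gb, math.ceil(2 * dataset_gb))
    if target > max_gb:
        return max_gb, False
    return target, True


for size in (20, 100, 600):
    print(size, recommended_data_disk_gb(size))
```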

Deletion behavior

The ‘Delete to trash’ option is unchecked by default, which is what you want. When left unchecked, deleted files are removed immediately, freeing up disk space right away. If you check this box, files will move to the system trash instead, meaning they still take up space (and cost) until you empty it.

Keep this unchecked to avoid paying for deleted files that remain in the trash.

Cost awareness

Persistent Disks are fast but cost more than Cloud Storage.

Typical rates:

- Balanced PD: ~$0.10–$0.12 / GB / month

- SSD PD: ~$0.17–$0.20 / GB / month

- Cloud Storage (Standard): ~$0.020 (regional, e.g., us-central1) to ~$0.026 (multi-region) / GB / month

Rule of thumb: use PDs only for active work; store everything else in Cloud Storage.

Example: a 200 GB dataset costs ~$24/month on a Balanced PD but only ~$4–5/month in Cloud Storage.
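The comparison above, and the boot-disk estimate earlier in this episode, are simple size × rate products. A sketch using the approximate rates listed here; it assumes the ~730-hour month used in GCP price estimates for the hourly figure, and the helper names are hypothetical.

```python
HOURS_PER_MONTH = 730  # assumption: the ~730-hour month used in GCP price estimates


def monthly_cost(size_gb: float, rate_per_gb_month: float) -> float:
    """Dollars per month to keep `size_gb` at the given per-GB monthly rate."""
    return size_gb * rate_per_gb_month


def hourly_cost(size_gb: float, rate_per_gb_month: float) -> float:
    """The same cost spread over the hours of a month."""
    return monthly_cost(size_gb, rate_per_gb_month) / HOURS_PER_MONTH


print(f"200 GB, Balanced PD at $0.12: ${monthly_cost(200, 0.12):.2f}/month")
print(f"200 GB, GCS regional at $0.020: ${monthly_cost(200, 0.020):.2f}/month")
print(f"200 GB, GCS multi-region at $0.026: ${monthly_cost(200, 0.026):.2f}/month")
print(f"100 GB boot disk at $0.10: ${hourly_cost(100, 0.10):.4f}/hour")
```

Disks are billed for their provisioned size whether or not they are full, which is another reason to start small and grow them only when needed.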

Check the latest pricing here:

- Persistent Disk & Image pricing

- Cloud Storage pricing

Create notebook

- Click Create to create the instance. Your notebook instance will start in a few minutes. When its status is “Running,” you can open JupyterLab and begin working.

Managing training and tuning with the controller notebook

In the following episodes, we will use the Vertex AI Python SDK (google-cloud-aiplatform) from this notebook to submit compute-heavy tasks on more powerful machines. Examples include:

- Training a model on a GPU-backed instance.

- Running hyperparameter tuning jobs managed by Vertex AI.

This pattern keeps costs low by running your notebook on a modest VM while only incurring charges for larger resources when they are actively in use.

Challenge: Notebook Roles

Your university provides different compute options: laptops, on-prem HPC, and GCP.

- What role does a Workbench Instance notebook play compared to an HPC login node or a laptop-based JupyterLab?

- Which tasks should stay in the notebook (lightweight control, visualization) versus being launched to larger cloud resources?

The notebook serves as a lightweight control plane.

- Like an HPC login node, it is not meant for heavy computation.

- It is suitable for small preprocessing, visualization, and orchestrating jobs.

- Resource-intensive tasks (training, tuning, batch jobs) should be submitted to scalable cloud resources (GPU/large VM instances) via the Vertex AI SDK.

Load pre-filled Jupyter notebooks

Once your newly created instance shows as Active (green checkmark), click Open JupyterLab to open the instance in JupyterLab. From there, we can create as many Jupyter notebooks as we would like within the instance environment.

We will then select the standard python3 environment to start our first .ipynb notebook (Jupyter notebook). We can use this environment since we aren’t doing any training/tuning just yet.

Within the Jupyter notebook, run the following command to clone the lesson repo into our Jupyter environment:

Then, navigate to /Intro_GCP_for_ML/notebooks/04-Accessing-and-managing-data.ipynb to begin the first notebook.

Key Points

- Use a small Workbench Instance notebook as a controller to manage larger, resource-intensive tasks.

- Always navigate to the “Instances” tab in Workbench, since older notebook types are deprecated.

- Choose the same region for your Workbench Instance and storage bucket to avoid extra transfer costs.

- Submit training and tuning jobs to scalable instances using the Vertex AI SDK.

- Labels help track costs effectively, especially in shared or multi-project environments.

- Workbench Instances come with JupyterLab 3 and GPU frameworks preinstalled, making them an easy entry point for ML workflows.

- Enable idle shutdown to avoid unexpected charges when notebooks are left running.

Content from Accessing and Managing Data in GCS with Vertex AI Notebooks

Last updated on 2025-10-27

Overview

Questions

- How can I load data from GCS into a Vertex AI Workbench notebook?

- How do I monitor storage usage and costs for my GCS bucket?

- What steps are involved in pushing new data back to GCS from a notebook?

Objectives

- Read data directly from a GCS bucket into memory in a Vertex AI notebook.

- Check storage usage and estimate costs for data in a GCS bucket.

- Upload new files from the Vertex AI environment back to the GCS bucket.

Initial setup

Load pre-filled Jupyter notebooks

If you haven't opened your newly created VM from the last episode yet, click Open JupyterLab to open the instance in JupyterLab. From there, we can create as many Jupyter notebooks as we would like within the instance environment.

We will then select the standard python3 environment to start our first .ipynb notebook (Jupyter notebook). We can use this environment since we aren’t doing any training/tuning just yet.

Within the Jupyter notebook, run the following command to clone the lesson repo into our Jupyter environment:

Then, navigate to /Intro_GCP_for_ML/notebooks/04-Accessing-and-managing-data.ipynb to begin the first notebook.

Reading data from Google Cloud Storage (GCS)

Similar to other cloud vendors, we can either (A) read data directly from Google Cloud Storage (GCS) into memory, or (B) download a copy into your notebook VM. Since we’re using notebooks as controllers rather than training environments, the recommended approach is reading directly from GCS into memory.

A) Reading data directly into memory

PYTHON

from google.cloud import storage
import pandas as pd
import io

# Create a Cloud Storage client; on a Workbench VM it uses the VM's default credentials and project
client = storage.Client()

bucket_name = "sinkorswim-johndoe-titanic"  # ADJUST to your bucket's name
bucket = client.bucket(bucket_name)
blob = bucket.blob("titanic_train.csv")

# Download the object's bytes into memory and parse them as CSV (no local file is written)
train_data = pd.read_csv(io.BytesIO(blob.download_as_bytes()))
print(train_data.shape)
train_data.head()

If you get an error, return to the Google Cloud Console (where we created our bucket and VM) and search for “Cloud Shell Editor”. Open a shell editor and run the commands below, replacing the bucket name with your bucket's name:

SH

# Grant read permissions on the bucket
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectViewer"

# Grant write permissions on the bucket
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectCreator"

# (Only if you also need overwrite/delete)
gcloud storage buckets add-iam-policy-binding gs://sinkorswim-johndoe-titanic \
  --member="serviceAccount:549047673858-compute@developer.gserviceaccount.com" \
  --role="roles/storage.objectAdmin"

B) Downloading a local copy

If you prefer, you can download the file from your bucket to the notebook VM’s local disk. This makes repeated reads faster within our notebook environment, but note that each download counts as a “GET” request and may incur a small data transfer (egress) cost if the bucket and VM are in different regions. If both are in the same region, there are no transfer fees — only standard request costs (typically fractions of a cent).
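Because every download is a separate GET request, a small guard can skip the download when the file is already on the VM’s disk. This is a minimal sketch. ensure_local_copy is a hypothetical helper, and the only real API it relies on is blob.download_to_filename from google-cloud-storage, which is passed in as a callable.

```python
import os
from typing import Callable


def ensure_local_copy(local_path: str, download: Callable[[str], None]) -> bool:
    """Download a file only if it is not already on the VM's local disk.

    `download` is any callable that writes the object to the given path,
    for example blob.download_to_filename. Returns True if it downloaded.
    """
    if os.path.exists(local_path):
        return False  # reuse the cached copy: no new GET request
    download(local_path)
    return True


# In the notebook (assumes `client` and `bucket_name` from the cell above):
# blob = client.bucket(bucket_name).blob("titanic_train.csv")
# ensure_local_copy("/home/jupyter/titanic_train.csv", blob.download_to_filename)
```

Passing the download function in as a parameter keeps the helper testable without GCS access.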

Let’s verify what our path looks like first.

!pwd

Checking storage usage of a bucket
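One way to check usage is to walk the objects returned by client.list_blobs and add up their sizes. The sketch below assumes only that each listed Blob exposes a .size attribute in bytes. summarize_usage is a hypothetical helper name, and the stand-in objects let the logic run without a bucket.

```python
from types import SimpleNamespace
from typing import Iterable, Tuple


def summarize_usage(blobs: Iterable) -> Tuple[int, int]:
    """Return (object_count, total_size_bytes) for an iterable of blobs.

    Works with the Blob objects yielded by client.list_blobs(...), whose
    `.size` attribute is the object size in bytes.
    """
    count, total_bytes = 0, 0
    for blob in blobs:
        count += 1
        total_bytes += blob.size or 0  # treat a missing size as 0
    return count, total_bytes


# Offline check with stand-in objects (no GCS access needed)
fake_blobs = [SimpleNamespace(name="a.csv", size=1024), SimpleNamespace(name="b.csv", size=2048)]
print(summarize_usage(fake_blobs))  # (2, 3072)

# In the notebook:
# n_objects, total_size_bytes = summarize_usage(client.list_blobs(bucket_name))
# print(f"{n_objects} objects, {total_size_bytes / 1024**2:.2f} MB")
```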

Estimating storage costs

PYTHON

# Sum the size (in bytes) of every object in the bucket
total_size_bytes = sum(blob.size for blob in client.list_blobs(bucket_name))
storage_price_per_gb = 0.02  # $/GB/month for Standard storage
total_size_gb = total_size_bytes / (1024**3)
monthly_cost = total_size_gb * storage_price_per_gb
print(f"Estimated monthly cost: ${monthly_cost:.4f}")
print(f"Estimated annual cost: ${monthly_cost*12:.4f}")

For updated prices, see GCS Pricing.

Writing output files to GCS

Create a sample file on the notebook VM’s storage.

PYTHON

# Create a sample file locally on the notebook VM
file_path = "/home/jupyter/Notes.txt"
with open(file_path, "w") as f:
    f.write("This is a test note for GCS.")
!ls /home/jupyter

Upload the file:

PYTHON

# Point to the right bucket
bucket = client.bucket(bucket_name)
# Create a *Blob* object, which represents a path inside the bucket
# (here it will end up as gs://<bucket_name>/docs/Notes.txt)
blob = bucket.blob("docs/Notes.txt")
# Upload the local file into that blob (object) in GCS
blob.upload_from_filename(file_path)
print("File uploaded successfully.")

List bucket contents:

Challenge: Estimating GCS Costs

Suppose you store 50 GB of data in Standard storage (us-central1) for one month.

- Estimate the monthly storage cost.

- Then estimate the cost if you download (egress) the entire dataset once at the end of the month.

Hints

- Storage: $0.02 per GB-month

- Egress: $0.12 per GB

Solution

- Storage cost: 50 GB × $0.02 = $1.00

- Egress cost: 50 GB × $0.12 = $6.00

- Total cost: $7.00 for one month including one full download
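The same arithmetic fits in a small helper, which is handy for trying other sizes or durations. This is a sketch. estimate_gcs_cost is a hypothetical name, and its default prices are the hint values above, not current list prices, so check GCS Pricing for your region.

```python
def estimate_gcs_cost(
    size_gb: float,
    months: float = 1.0,
    storage_price_per_gb_month: float = 0.02,  # hint value above (Standard storage)
    egress_price_per_gb: float = 0.12,  # hint value above
    full_downloads: int = 0,
) -> dict:
    """Rough estimate: storage for `months` plus `full_downloads` complete egress copies."""
    storage = size_gb * storage_price_per_gb_month * months
    egress = size_gb * egress_price_per_gb * full_downloads
    return {"storage": storage, "egress": egress, "total": storage + egress}


costs = estimate_gcs_cost(50, full_downloads=1)
print({k: round(v, 2) for k, v in costs.items()})
# {'storage': 1.0, 'egress': 6.0, 'total': 7.0}
```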

- Load data from GCS into memory to avoid managing local copies when possible.

- Periodically check storage usage and costs to manage your GCS budget.

- Use Vertex AI Workbench notebooks to upload analysis results back to GCS, keeping workflows organized and reproducible.

Content from Using a GitHub Personal Access Token (PAT) to Push/Pull from a Vertex AI Notebook

Last updated on 2025-10-24

Overview

Questions

- How can I securely push/pull code to and from GitHub within a Vertex AI Workbench notebook?

- What steps are necessary to set up a GitHub PAT for authentication in GCP?

- How can I convert notebooks to .py files and ignore .ipynb files in version control?

Objectives

- Configure Git in a Vertex AI Workbench notebook to use a GitHub Personal Access Token (PAT) for HTTPS-based authentication.

- Securely handle credentials in a notebook environment using getpass.

- Convert .ipynb files to .py files for better version control practices in collaborative projects.

Step 0: Initial setup

In the previous episode, we cloned our forked repository as part of the workshop setup. In this episode, we’ll see how to push our code to this fork. Complete these three setup steps before moving forward.

1. Clone the fork if you haven’t already. See the previous episode.

2. Start a new Jupyter notebook, and name it something like Interacting-with-git.ipynb. We can use the default Python 3 kernel in Vertex AI Workbench.

3. Change directory to the workspace where your repository is located. In Vertex AI Workbench, notebooks usually live under /home/jupyter/.

Step 1: Using a GitHub personal access token (PAT) to push/pull from a Vertex AI notebook

When working in Vertex AI Workbench notebooks, you may often need to push code updates to GitHub repositories. Since Workbench VMs may be stopped and restarted, configurations like SSH keys may not persist. HTTPS-based authentication with a GitHub Personal Access Token (PAT) is a practical solution. PATs provide flexibility for authentication and enable seamless interaction with both public and private repositories directly from your notebook.

Important Note: Personal access tokens are powerful credentials. Select the minimum necessary permissions and handle the token carefully.

Generate a personal access token (PAT) on GitHub

- Go to Settings in GitHub.

- Click Developer settings at the bottom of the left sidebar.

- Select Personal access tokens, then click Tokens (classic).

- Click Generate new token (classic).

- Give your token a descriptive name and set an expiration date if desired.

- Select minimum permissions:

  - Public repos: public_repo

  - Private repos: repo

- Click Generate token and copy it immediately; you won’t be able to see it again.

Caution: Treat your PAT like a password. Don’t share it or expose it in your code. Use a password manager to store it.

Step 2: Configure Git settings

PYTHON

!git config --global user.name "Your Name"
!git config --global user.email your_email@wisc.edu

- user.name: Will appear in the commit history.

- user.email: Should match an email address associated with your GitHub account so commits are linked to your profile.

Step 3: Convert .ipynb notebooks to .py

Tracking .py files instead of .ipynb helps with cleaner version control. Notebooks store outputs and metadata, which makes diffs noisy. .py files are lighter and easier to review.

- Install Jupytext.

- Convert a notebook to .py.

- Convert all notebooks in the current directory.
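To see what the conversion removes, here is a standard-library sketch that writes a notebook’s cells to a .py file and drops outputs and execution counts. It only loosely resembles Jupytext’s py:percent format (e.g., jupytext --to py:percent notebook.ipynb), and it is not Jupytext itself. notebook_to_percent_script is a hypothetical helper, and Jupytext remains the right tool for real round-trips.

```python
import json
from pathlib import Path


def notebook_to_percent_script(ipynb_path: str, py_path: str) -> int:
    """Write a notebook's cells to a .py file using "# %%" cell markers.

    Outputs and execution counts are dropped; markdown cells become comment
    blocks. Returns the number of cells written.
    """
    nb = json.loads(Path(ipynb_path).read_text(encoding="utf-8"))
    chunks = []
    for cell in nb.get("cells", []):
        source = "".join(cell.get("source", []))  # source may be a list of lines or a str
        if cell.get("cell_type") == "code":
            chunks.append("# %%\n" + source)
        elif cell.get("cell_type") == "markdown":
            commented = "\n".join("# " + line for line in source.splitlines())
            chunks.append("# %% [markdown]\n" + commented)
    Path(py_path).write_text("\n\n".join(chunks) + "\n", encoding="utf-8")
    return len(chunks)
```

Because outputs never reach the .py file, re-running a notebook leaves its diff unchanged unless the code itself changes.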

Step 4: Add and commit .py files

Step 5: Add .ipynb to .gitignore

PYTHON

!touch .gitignore
with open(".gitignore", "a") as gitignore:
    gitignore.write("\n# Ignore Jupyter notebooks\n*.ipynb\n")
!cat .gitignore

Add other temporary files too:

PYTHON

with open(".gitignore", "a") as gitignore:
    gitignore.write("\n# Ignore cache and temp files\n__pycache__/\n*.tmp\n*.log\n")

Commit the .gitignore:

Step 6: Syncing with GitHub

First, pull the latest changes:

If conflicts occur, resolve them manually before committing.

Then push with your PAT credentials:
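One common pattern, shown here as a sketch rather than the lesson’s exact commands, is to prompt for the token with getpass and pass a one-off HTTPS URL of the form https://USERNAME:TOKEN@github.com/OWNER/REPO.git straight to git push. Because the URL is not saved as a remote, the token is not written to .git/config. pat_push_url is a hypothetical helper. The username, repository, and branch name main in the usage comments are placeholders.

```python
from urllib.parse import quote


def pat_push_url(username: str, token: str, owner: str, repo: str) -> str:
    """Build a one-off HTTPS remote URL that embeds a GitHub PAT.

    Username and token are percent-encoded so characters such as '@' or ':'
    cannot break the URL.
    """
    user = quote(username, safe="")
    secret = quote(token, safe="")
    return f"https://{user}:{secret}@github.com/{owner}/{repo}.git"


# Usage in a notebook cell (placeholders; replace with your own values):
# from getpass import getpass
# token = getpass("GitHub PAT: ")  # typed input is not echoed or saved in the notebook
# push_url = pat_push_url("your-username", token, "your-username", "your-fork")
# !git push {push_url} main
```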

Step 7: Convert .py back to notebooks (optional)

To convert .py files back to .ipynb after pulling updates:

Challenge: GitHub PAT Workflow

- Why might you prefer using a PAT with HTTPS instead of SSH keys in Vertex AI Workbench?

- What are the benefits of converting .ipynb files to .py before committing to a shared repo?

Solution

- PATs with HTTPS are easier to set up in temporary environments where SSH configs don’t persist.

- Converting notebooks to .py results in cleaner diffs, easier code review, and smaller repos without stored outputs/metadata.

- Use a GitHub PAT for HTTPS-based authentication in Vertex AI Workbench notebooks.

- Securely enter sensitive information in notebooks using getpass.

- Converting .ipynb files to .py files helps with cleaner version control.

- Adding .ipynb files to .gitignore keeps your repository organized.

Content from Training Models in Vertex AI: Intro

Last updated on 2025-10-30

Overview

Questions

- What are the differences between training locally in a Vertex AI notebook and using Vertex AI-managed training jobs?

- How do custom training jobs in Vertex AI streamline the training process for various frameworks?

- How does Vertex AI handle scaling across CPUs, GPUs, and TPUs?

Objectives

- Understand the difference between local training in a Vertex AI Workbench notebook and submitting managed training jobs.

- Learn to configure and use Vertex AI custom training jobs for different frameworks (e.g., XGBoost, PyTorch, SKLearn).

- Understand scaling options in Vertex AI, including when to use CPUs, GPUs, or TPUs.

- Compare performance, cost, and setup between custom scripts and pre-built containers in Vertex AI.

- Conduct training with data stored in GCS and monitor training job status using the Google Cloud Console.

Initial setup

1. Open pre-filled notebook

Navigate to /Intro_GCP_for_ML/notebooks/06-Training-models-in-VertexAI.ipynb to begin this notebook.

2. CD to instance home directory

To ensure we’re all starting from the same spot, change directory to your Jupyter home directory (/home/jupyter).

3. Set environment variables

This code sets the project, the region, and the GCS bucket used for input/output data. (The Vertex AI SDK itself is initialized later with aiplatform.init().)

- PROJECT_ID: Identifies your GCP project.

- REGION: Determines where training jobs run (choose a region close to your data).

PYTHON

from google.cloud import storage

client = storage.Client()
PROJECT_ID = client.project
REGION = "us-central1"
BUCKET_NAME = "sinkorswim-johndoe-titanic"  # ADJUST to your bucket's name
LAST_NAME = "DOE"  # ADJUST to your last name or name
print(f"project = {PROJECT_ID}\nregion = {REGION}\nbucket = {BUCKET_NAME}")

Testing train_xgboost.py locally in the notebook

Understanding the XGBoost Training Script (GCP version)

Take a moment to review the train_xgboost.py script we’re using on GCP, found in Intro_GCP_for_ML/scripts/train_xgboost.py. This script handles preprocessing, training, and saving an XGBoost model. It supports both local paths and GCS (gs://) paths, and it adapts to Vertex AI conventions (e.g., AIP_MODEL_DIR).

Try answering the following questions:

- Data preprocessing: What transformations are applied to the dataset before training?

- Training function: What does the train_model() function do? Why print the training time?

- Command-line arguments: What is the purpose of argparse in this script? How would you change the number of training rounds?

- Handling local vs. GCP runs: How does the script let you run the same code locally, in Workbench, or as a Vertex AI job? Which environment variable controls where the model artifact is written?

- Training and saving the model: What format is the dataset converted to before training, and why? How does the script save to a local path vs. a gs:// destination?

After reviewing, discuss any questions or observations with your group.

Solution

- Data preprocessing: The script fills missing values (Age with median, Embarked with mode), maps categorical fields to numeric (Sex → {male: 1, female: 0}, Embarked → {S: 0, C: 1, Q: 2}), and drops non-predictive columns (Name, Ticket, Cabin).

- Training function: train_model() constructs and fits an XGBoost model with the provided parameters and prints wall-clock training time. Timing helps compare runs and make sensible scaling choices.

- Command-line arguments: argparse lets you set hyperparameters and file paths without editing code (e.g., --max_depth, --eta, --num_round, --train). To change rounds: python train_xgboost.py --num_round 200

- Handling local vs. GCP runs:

  - Input: You pass --train as either a local path (train.csv) or a GCS URI (gs://bucket/path.csv). The script automatically detects gs:// and reads the file directly from Cloud Storage using the Python client.

  - Output: If the environment variable AIP_MODEL_DIR is set (as it is in Vertex AI CustomJobs), the trained model is written there, often a gs:// path. Otherwise, the model is saved in the current working directory, which works in both local and Workbench environments.

- Training and saving the model: The training data is converted into an XGBoost DMatrix, an optimized format that speeds up training and reduces memory use. The trained model is serialized with joblib. When saving locally, the file is written directly to disk. If saving to a Cloud Storage path (gs://...), the model is first saved to a temporary file and then uploaded to the specified bucket.
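The input/output handling described above can be sketched as two small helpers: one splits a gs:// URI into the bucket and object names the storage client expects, and the other picks the save location from AIP_MODEL_DIR. This illustrates the idea under stated assumptions. It is not the code in train_xgboost.py, and split_gcs_uri and resolve_model_path are hypothetical names.

```python
import os
from typing import Mapping, Optional, Tuple


def split_gcs_uri(uri: str) -> Tuple[str, str]:
    """Split 'gs://bucket/path/to/object' into ('bucket', 'path/to/object')."""
    if not uri.startswith("gs://"):
        raise ValueError(f"not a GCS URI: {uri!r}")
    bucket, _, blob_name = uri[len("gs://"):].partition("/")
    return bucket, blob_name


def resolve_model_path(filename: str = "xgboost-model",
                       env: Optional[Mapping[str, str]] = None) -> str:
    """Save under AIP_MODEL_DIR when Vertex AI sets it, else in the current directory."""
    env = os.environ if env is None else env
    model_dir = env.get("AIP_MODEL_DIR")
    if model_dir:
        # Join with "/" rather than os.path.join so gs:// URIs stay valid
        return model_dir.rstrip("/") + "/" + filename
    return os.path.join(os.getcwd(), filename)


print(split_gcs_uri("gs://sinkorswim-johndoe-titanic/titanic_train.csv"))
# ('sinkorswim-johndoe-titanic', 'titanic_train.csv')
print(resolve_model_path(env={"AIP_MODEL_DIR": "gs://my-bucket/artifacts/xgb/run1/model/"}))
# gs://my-bucket/artifacts/xgb/run1/model/xgboost-model
```

When the resolved path starts with gs://, split_gcs_uri gives the pieces for client.bucket(...).blob(...) to upload the temporary file. Otherwise, the model is a plain local write.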

Before scaling training jobs onto managed resources, it’s essential to test your training script locally. This prevents wasting GPU/TPU time on bugs or misconfigured code.

Guidelines for testing ML pipelines before scaling

- Run tests locally first with small datasets.

- Use a subset of your dataset (1–5%) for fast checks.

- Start with minimal compute before moving to larger accelerators.

- Log key metrics such as loss curves and runtimes.

- Verify correctness first before scaling up.
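A reproducible 1–5% subset takes one pandas call. In this sketch, quick_check_sample is a hypothetical helper and the data frame is synthetic. For classification data with rare classes, a stratified sample may be a better choice than a plain random one.

```python
import pandas as pd


def quick_check_sample(df: pd.DataFrame, frac: float = 0.05, seed: int = 42) -> pd.DataFrame:
    """Return a reproducible random subset (default 5%) for fast smoke tests."""
    if not 0 < frac <= 1:
        raise ValueError("frac must be in (0, 1]")
    return df.sample(frac=frac, random_state=seed).reset_index(drop=True)


# Synthetic 1,000-row frame standing in for a real dataset
demo = pd.DataFrame({"x": range(1000), "y": [i % 2 for i in range(1000)]})
small = quick_check_sample(demo, frac=0.02)
print(len(small))  # 20
```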

What tests should we do before scaling?

Before scaling to multiple or more powerful instances (e.g., GPUs or TPUs), it’s important to run a few sanity checks. Skipping these can lead to: silent data bugs, runtime blowups at scale, inefficient experiments, or broken model artifacts.

Here is a non-exhaustive list of suggested tests to perform before scaling up your compute needs.

- Reproducibility – do you get the same result each time you run your code? If not, set seeds controlling randomness.

- Data loads correctly – dataset loads without errors, expected columns exist, missing values handled.

- Overfitting check – train on a tiny dataset (e.g., 100 rows). If it doesn’t overfit, something is off.

- Loss behavior – verify training loss decreases and doesn’t diverge.

- Runtime estimate – get a rough sense of training time on small data.

- Memory estimate – check approximate memory use.

- Save & reload – ensure model saves, reloads, and infers without errors.
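For the reproducibility check, a minimal pattern is to seed every random number generator your code uses and then compare two runs. This sketch assumes only Python’s random module and NumPy are involved. run_once is a hypothetical stand-in for a training function, and frameworks keep their own generators (e.g., a seed entry in XGBoost’s params, or torch.manual_seed in PyTorch).

```python
import random

import numpy as np


def set_seeds(seed: int = 42) -> None:
    """Seed Python's and NumPy's global random number generators."""
    random.seed(seed)
    np.random.seed(seed)


def run_once(seed: int) -> np.ndarray:
    """Stand-in for a training run whose result depends on randomness."""
    set_seeds(seed)
    idx = np.random.permutation(10)[:3]  # e.g., picking a random training subset
    noise = random.random()  # e.g., random initialization
    return np.append(idx, noise)


a, b = run_once(0), run_once(0)
print("reproducible:", np.array_equal(a, b))  # reproducible: True
```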

Download data into notebook environment

Sometimes it’s helpful to keep a copy of data in your notebook VM for quick iteration, even though GCS is the preferred storage location.

Local test run of train_xgboost.py

Outside of this workshop, you should run these kinds of tests on your local laptop or lab PC when possible. We’re using the Workbench VM here only for convenience in this workshop setting, but this does incur a small fee for our running VM.

- For large datasets, use a small representative sample of the total dataset when testing locally (i.e., just to verify that code is working and model overfits nearly perfectly after training enough epochs)

- For larger models, use smaller model equivalents (e.g., 100M vs 7B params) when testing locally

PYTHON

# Training configuration parameters for XGBoost
MAX_DEPTH = 3  # maximum depth of each decision tree (controls model complexity)
ETA = 0.1  # learning rate (how much each tree contributes to the overall model)
SUBSAMPLE = 0.8  # fraction of training samples used per boosting round (prevents overfitting)
COLSAMPLE = 0.8  # fraction of features (columns) sampled per tree (adds randomness and diversity)
NUM_ROUND = 100  # number of boosting iterations (trees) to train

import time as t

start = t.time()

# Run the custom training script with hyperparameters defined above
!python Intro_GCP_for_ML/scripts/train_xgboost.py \
    --max_depth $MAX_DEPTH \
    --eta $ETA \
    --subsample $SUBSAMPLE \
    --colsample_bytree $COLSAMPLE \
    --num_round $NUM_ROUND \
    --train titanic_train.csv

print(f"Total local runtime: {t.time() - start:.2f} seconds")

Training on this small dataset should take less than 1 minute. Log the runtime as a baseline. You should see the following output file:

- xgboost-model: Python-serialized XGBoost model (Booster) saved via joblib; load it with joblib.load for reuse.

Evaluate the trained model on validation data

Now that we’ve trained and saved an XGBoost model, we want to do the most important sanity check:

Does this model make reasonable predictions on unseen data?

This step:

1. Loads the serialized model artifact that was written by train_xgboost.py

2. Loads a test set of Titanic passenger data

3. Applies the same preprocessing as training

4. Generates predictions

5. Computes simple accuracy

First, we’ll download the test data.

PYTHON

bucket = client.bucket(BUCKET_NAME)  # bucket handle for this notebook
blob = bucket.blob("titanic_test.csv")
blob.download_to_filename("titanic_test.csv")
print("Downloaded titanic_test.csv")

Then, we apply the same preprocessing function used by our training script before applying the model to our data.

PYTHON

import joblib
import pandas as pd
import xgboost as xgb
from sklearn.metrics import accuracy_score

from Intro_GCP_for_ML.scripts.train_xgboost import preprocess_data  # reuse same preprocessing

# Load test data
test_df = pd.read_csv("titanic_test.csv")

# Apply same preprocessing from training
X_test, y_test = preprocess_data(test_df)

# Load trained model from local file
model = joblib.load("xgboost-model")

# Predict on test data
dtest = xgb.DMatrix(X_test)
y_pred = model.predict(dtest)
y_pred_binary = (y_pred > 0.5).astype(int)

# Compute accuracy
acc = accuracy_score(y_test, y_pred_binary)
print(f"Test accuracy: {acc:.3f}")

Training via Vertex AI custom training job

Unlike “local” training using our notebook’s VM, this next approach launches a managed training job that runs on scalable compute. Vertex AI handles provisioning, scaling, logging, and saving outputs to GCS.

Which machine type to start with?

Start with a small CPU machine like n1-standard-4. Only scale up to GPUs/TPUs once you’ve verified your script. See Instances for ML on GCP for guidance.

Creating a custom training job with the SDK

We’ll first initialize the Vertex AI platform with our environment variables. We’ll also set a RUN_ID and ARTIFACT_DIR to help store outputs.

PYTHON

from google.cloud import aiplatform
import datetime as dt

RUN_ID = dt.datetime.now().strftime("%Y%m%d-%H%M%S")
ARTIFACT_DIR = f"gs://{BUCKET_NAME}/artifacts/xgb/{RUN_ID}/"  # everything will live beside this
print(f"project = {PROJECT_ID}\nregion = {REGION}\nbucket = {BUCKET_NAME}\nartifact_dir = {ARTIFACT_DIR}")

# Staging bucket is only for the SDK's temp code tarball (aiplatform-*.tar.gz)
aiplatform.init(project=PROJECT_ID, location=REGION, staging_bucket=f"gs://{BUCKET_NAME}/.vertex_staging")

This next section defines a custom training job in Vertex AI, specifying how and where the training code will run. It points to your training script (train_xgboost.py), uses Google’s prebuilt XGBoost training container image, and installs any extra dependencies your script needs (in this case, google-cloud-storage for accessing GCS). The display_name sets a readable name for tracking the job in the Vertex AI console.

Prebuilt containers for training

Vertex AI provides prebuilt Docker container images for model training. These containers are organized by machine learning frameworks and framework versions and include common dependencies that you might want to use in your training code. To learn more about prebuilt training containers, see Prebuilt containers for custom training.

PYTHON

job = aiplatform.CustomTrainingJob(
    display_name=f"{LAST_NAME}_xgb_{RUN_ID}",
    script_path="Intro_GCP_for_ML/scripts/train_xgboost.py",
    container_uri="us-docker.pkg.dev/vertex-ai/training/xgboost-cpu.2-1:latest",
    requirements=["google-cloud-storage"],  # extra pip packages installed before the script runs
)

Finally, this next block launches the custom training job on Vertex AI using the configuration defined earlier. We won’t be charged for our selected MACHINE until we run the code below using job.run(). This marks the point when our script actually begins executing remotely on the Vertex training infrastructure. Once job.run() is called, Vertex handles packaging your training script, transferring it to the managed training environment, provisioning the requested compute instance, and monitoring the run. The job’s status and logs can be viewed directly in the Vertex AI Console under Training → Custom jobs.

If you need to cancel a job mid-run, you can do so from the console or via the SDK by calling job.cancel(). When the job completes, Vertex automatically tears down the compute resources so you only pay for the active training time.

- The args list passes command-line parameters directly into your training script, including hyperparameters and the path to the training data in GCS.

- base_output_dir specifies where all outputs (model, metrics, logs) will be written in Cloud Storage; Vertex AI points AIP_MODEL_DIR at its model/ subfolder.

- machine_type controls the compute resources used for training.

- When sync=True, the notebook waits until the job finishes before continuing, making it easier to inspect results immediately after training.
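args is a list of --name=value strings that argparse parses. Because of that, you can generate it from one dictionary, so the same hyperparameters drive both the local test and the Vertex job without retyping. In this sketch, to_cli_args is a hypothetical helper, and the values mirror the ones used in the local run.

```python
def to_cli_args(params: dict) -> list:
    """Turn {"max_depth": 3, ...} into ["--max_depth=3", ...] for argparse."""
    return [f"--{name}={value}" for name, value in params.items()]


# Same values as the local test run; ADJUST the bucket name
hyperparams = {
    "train": "gs://sinkorswim-johndoe-titanic/titanic_train.csv",
    "max_depth": 3,
    "eta": 0.1,
    "subsample": 0.8,
    "colsample_bytree": 0.8,
    "num_round": 100,
}
print(to_cli_args(hyperparams)[:2])
# ['--train=gs://sinkorswim-johndoe-titanic/titanic_train.csv', '--max_depth=3']

# In the notebook: job.run(args=to_cli_args(hyperparams), ...)
```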

PYTHON

MACHINE = "n1-standard-4"  # small CPU machine to start; adjust to something more powerful when needed

job.run(
    args=[
        f"--train=gs://{BUCKET_NAME}/titanic_train.csv",
        f"--max_depth={MAX_DEPTH}",
        f"--eta={ETA}",
        f"--subsample={SUBSAMPLE}",
        f"--colsample_bytree={COLSAMPLE}",
        f"--num_round={NUM_ROUND}",
    ],
    replica_count=1,
    machine_type=MACHINE,
    base_output_dir=ARTIFACT_DIR,  # Vertex sets AIP_MODEL_DIR to ARTIFACT_DIR + "model/"
    sync=True,
)
print("Model + logs folder:", ARTIFACT_DIR)

This launches a managed training job with Vertex AI. It should take 2–5 minutes for the training job to complete.

Understanding the training output message

After your job finishes, you may see a message like:

Training did not produce a Managed Model returning None.

This is expected when running a CustomTrainingJob without specifying deployment parameters. The same job class can be used in two ways:

- Training only (research/development): You control training and save models/logs to Cloud Storage via AIP_MODEL_DIR. This is ideal for experimentation and cost control.

- Training plus model registration (for deployment): You also provide model_serving_container_image_uri (when creating the job) and model_display_name (when calling run()), and Vertex automatically registers a Managed Model in the Model Registry for deployment to an endpoint.

In our setup, we’re intentionally using the simpler training-only path. Your trained model is safely stored under your specified artifact directory (e.g., gs://{BUCKET_NAME}/artifacts/xgb/{RUN_ID}/model/), and you can later register or deploy it manually when ready.

Monitoring training jobs in the Console

- Go to the Google Cloud Console.

- Navigate to Vertex AI > Training > Custom Jobs.

- Click on your job name to see status, logs, and output model artifacts.

- Cancel jobs from the console if needed (be careful not to stop jobs you don’t own in shared projects).

Training artifacts

After the training run completes, we can manually view our bucket using the Google Cloud Console or run the below code.

PYTHON

total_size_bytes = 0
for blob in client.list_blobs(BUCKET_NAME):
    total_size_bytes += blob.size
    print(blob.name)
total_size_mb = total_size_bytes / (1024**2)
print(f"Total size of bucket '{BUCKET_NAME}': {total_size_mb:.2f} MB")

Training Artifacts → ARTIFACT_DIR

This is your intended output location, set via base_output_dir. It contains everything your training script explicitly writes. In our case, this includes:

- {BUCKET_NAME}/artifacts/xgb/{RUN_ID}/model/xgboost-model: Serialized XGBoost model (Booster) saved via joblib; reload it later with joblib.load() for reuse or deployment.

Evaluate the trained model stored on GCS

PYTHON

import io

import joblib
import pandas as pd
import xgboost as xgb
from sklearn.metrics import accuracy_score

from Intro_GCP_for_ML.scripts.train_xgboost import preprocess_data  # same preprocessing as training

# Load test data directly into memory
bucket = client.bucket(BUCKET_NAME)
blob = bucket.blob("titanic_test.csv")
test_df = pd.read_csv(io.BytesIO(blob.download_as_bytes()))

# Apply same preprocessing logic used during training
X_test, y_test = preprocess_data(test_df)

# Load the model artifact written by the Vertex job (AIP_MODEL_DIR = ARTIFACT_DIR + "model/")
MODEL_BLOB_PATH = f"artifacts/xgb/{RUN_ID}/model/xgboost-model"
model_blob = bucket.blob(MODEL_BLOB_PATH)
model_bytes = model_blob.download_as_bytes()
model = joblib.load(io.BytesIO(model_bytes))

# Run predictions and compute accuracy
dtest = xgb.DMatrix(X_test)
y_pred_prob = model.predict(dtest)
y_pred = (y_pred_prob >= 0.5).astype(int)
acc = accuracy_score(y_test, y_pred)
print(f"Test accuracy (model from Vertex job): {acc:.3f}")

When training takes too long

Two main options in Vertex AI:

Option 1: Upgrade to more powerful machine types

- The simplest way to reduce training time is to use a larger machine or add GPUs (e.g., T4, V100, A100).

- This works best for small or medium datasets (<10 GB) and avoids the coordination overhead of distributed training.

- GPUs and TPUs can accelerate deep learning workloads significantly.

Option 2: Use distributed training with multiple replicas

- Vertex AI supports distributed training for many frameworks.

- The dataset is split across replicas, each training on a portion of the data with synchronized gradient updates.

- This approach is most useful for large datasets and long-running jobs.

When distributed training makes sense

- Dataset size exceeds 10–50 GB.

- Training on a single machine takes more than 10 hours.

- The model is a deep learning workload that scales naturally across GPUs or TPUs.

We will explore both options more in depth in the next episode when we train a neural network.

- Environment initialization: Use aiplatform.init() to set defaults for project, region, and bucket.

- Local vs managed training: Test locally before scaling into managed jobs.

- Custom jobs: Vertex AI lets you run scripts as managed training jobs using pre-built or custom containers.

- Scaling: Start small, then scale up to GPUs or distributed jobs as dataset/model size grows.

- Monitoring: Track job logs and artifacts in the Vertex AI Console.

Content from Training Models in Vertex AI: PyTorch Example

Last updated on 2025-10-30

Overview

Questions

- When should you consider a GPU (or TPU) instance for PyTorch training in Vertex AI, and what are the trade‑offs for small vs. large workloads?

- How do you launch a script‑based training job and write all artifacts (model, metrics, logs) next to each other in GCS without deploying a managed model?

Objectives

- Prepare the Titanic dataset and save train/val arrays to compressed .npz files in GCS.

- Submit a CustomTrainingJob that runs a PyTorch script and explicitly writes outputs to a chosen gs://…/artifacts/.../ folder.

- Co‑locate artifacts: model.pt (or .joblib), metrics.json, eval_history.csv, and training.log for reproducibility.

- Choose CPU vs. GPU instances sensibly; understand when distributed training is (not) worth it.

Initial setup

1. Open pre-filled notebook

Navigate to /Intro_GCP_for_ML/notebooks/06-Training-models-in-VertexAI-GPUs.ipynb to begin this notebook. Select the PyTorch environment (kernel).

Local PyTorch is only needed for local tests. Your Vertex AI job uses the container specified by container_uri (e.g., pytorch-cpu.2-1 or pytorch-gpu.2-1), so it brings its own framework at run time.

2. CD to instance home directory

To ensure we’re all starting from the same spot, change directory to your Jupyter home directory (/home/jupyter).

3. Set environment variables

This code initializes the Vertex AI environment by importing the Python SDK, setting the project, region, and defining a GCS bucket for input/output data.

PYTHON

from google.cloud import aiplatform, storage

client = storage.Client()
PROJECT_ID = client.project
REGION = "us-central1"
BUCKET_NAME = "sinkorswim-johndoe-titanic"  # ADJUST to your bucket's name
LAST_NAME = "DOE"  # ADJUST to your last name. Since we're in a shared account environment, this will help us track down jobs in the Console
print(f"project = {PROJECT_ID}\nregion = {REGION}\nbucket = {BUCKET_NAME}")

# Initialize the Vertex AI environment with the correct project and location.
# The staging bucket stores the compressed code package uploaded for training/tuning jobs.
aiplatform.init(project=PROJECT_ID, location=REGION, staging_bucket=f"gs://{BUCKET_NAME}/.vertex_staging")  # store tarballs in staging folder

Prepare data as .npz

Why .npz? NumPy’s .npz files are binary containers (compressed when written with np.savez_compressed) that can store multiple arrays (e.g., features and labels) together in a single file. They offer numerous benefits:

- Smaller, faster I/O than CSV for arrays.

- One file can hold multiple arrays (X_train, y_train).

- Natural fit for torch.utils.data.Dataset/DataLoader.

- Cloud-friendly: compressed .npz files reduce upload and download times and minimize GCS egress costs. Because each .npz is a single binary object, reading it from Google Cloud Storage (GCS) takes one download rather than many. This is faster and cheaper than streaming many small CSVs or images individually.

- Efficient data movement: Vertex AI does not automatically copy objects referenced in your script. Your script reads them at run time, either with the Cloud Storage client (e.g., for a gs://.../train_data.npz argument) or through the Cloud Storage FUSE mount that custom training jobs expose under /gcs/. Loading one .npz object into memory at startup means later array access does not go back over the network. For small-to-medium datasets, this adds minimal startup delay.

- Reproducible binary format: unlike CSV, .npz preserves exact dtypes and shapes, ensuring identical results across different environments and containers.

- Each GCS object read or listing request incurs a small per-request cost; using a single .npz reduces both the number of API calls and associated latency.
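A quick in-memory round trip shows these properties: several named arrays end up in one compressed object, and their dtypes and shapes are preserved. In this sketch, the io.BytesIO buffer stands in for a local file or a blob.download_as_bytes() payload, and the arrays are synthetic.

```python
import io

import numpy as np

# Two arrays with different dtypes/shapes, like X_train (float) and y_train (int)
X = np.random.default_rng(0).normal(size=(5, 7)).astype(np.float32)
y = np.array([0, 1, 1, 0, 1], dtype=np.int64)

buf = io.BytesIO()  # stands in for a file on disk or bytes downloaded from GCS
np.savez_compressed(buf, X_train=X, y_train=y)  # one object, several named arrays
buf.seek(0)

with np.load(buf) as data:
    print(sorted(data.files))  # ['X_train', 'y_train']
    X2, y2 = data["X_train"], data["y_train"]

print(X2.dtype, X2.shape, y2.dtype)  # float32 (5, 7) int64
print(np.array_equal(X, X2) and np.array_equal(y, y2))  # True
```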

PYTHON

import io

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler, LabelEncoder

# Load the Titanic CSV directly from GCS into memory
bucket = client.bucket(BUCKET_NAME)
blob = bucket.blob("titanic_train.csv")
df = pd.read_csv(io.BytesIO(blob.download_as_bytes()))

# Minimal preprocessing to numeric arrays
sex_enc = LabelEncoder().fit(df["Sex"])  # Fit label encoder on 'Sex' column (male/female)
df["Sex"] = sex_enc.transform(df["Sex"])  # Convert 'Sex' to numeric values (female=0, male=1; alphabetical order)
df["Embarked"] = df["Embarked"].fillna("S")  # Replace missing embarkation ports with most common ('S')
emb_enc = LabelEncoder().fit(df["Embarked"])  # Fit label encoder on 'Embarked' column (S/C/Q)
df["Embarked"] = emb_enc.transform(df["Embarked"])  # Convert embarkation categories to numeric codes
df["Age"] = df["Age"].fillna(df["Age"].median())  # Fill missing ages with median (robust to outliers)
df["Fare"] = df["Fare"].fillna(df["Fare"].median())  # Fill missing fares with median to avoid NaNs

X = df[["Pclass", "Sex", "Age", "SibSp", "Parch", "Fare", "Embarked"]].values  # Select numeric feature columns as input
y = df["Survived"].values  # Target variable (1=survived, 0=did not survive)

scaler = StandardScaler()  # Initialize standard scaler (best practice for neural net training)
X = scaler.fit_transform(X)  # Scale features to mean=0, std=1 for stable training

X_train, X_val, y_train, y_val = train_test_split(  # Split dataset into training and validation sets
    X, y, test_size=0.2, random_state=42)  # 80% training, 20% validation (fixed random seed)

np.savez_compressed("/home/jupyter/train_data.npz", X_train=X_train, y_train=y_train)  # Save training arrays to a compressed .npz file
np.savez_compressed("/home/jupyter/val_data.npz", X_val=X_val, y_val=y_val)  # Save validation arrays to a compressed .npz file

We can then upload the files to our GCS bucket.

PYTHON

# Upload to GCS
bucket.blob("data/train_data.npz").upload_from_filename("/home/jupyter/train_data.npz")
bucket.blob("data/val_data.npz").upload_from_filename("/home/jupyter/val_data.npz")
print("Uploaded: gs://%s/data/train_data.npz and val_data.npz" % BUCKET_NAME)

To check our work (bucket contents), we can again use the following code:

Minimal PyTorch training script (train_nn.py): local test

Outside of this workshop, you should run these kinds of tests on your local laptop or lab PC when possible. We're using the Workbench VM here only for convenience in this workshop setting, but keeping the VM running does incur a small fee.

- For large datasets, test locally on a small, representative sample of the full dataset. The goal is only to verify that the code works and that the model can overfit the sample nearly perfectly after enough epochs.

- For larger models, test locally with smaller model equivalents (e.g., 100M vs. 7B parameters).
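One way to draw a small but representative sample is to subsample each class in proportion to its frequency. The sketch below assumes that the features and labels are NumPy arrays, as in the .npz files created above. `stratified_sample` is a hypothetical helper and is not part of train_nn.py. scikit-learn's `train_test_split(..., stratify=y)` is a ready-made alternative.

```python
import numpy as np

def stratified_sample(X, y, n, seed=0):
    """Hypothetical helper: draw about n rows from (X, y), keeping class proportions."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    picked = []
    for cls, count in zip(classes, counts):
        count = int(count)
        # Rows to keep for this class, proportional to its share of y (at least 1)
        k = min(count, max(1, round(n * count / len(y))))
        rows = np.flatnonzero(y == cls)
        picked.append(rng.choice(rows, size=k, replace=False))
    idx = np.sort(np.concatenate(picked))  # keep the original row order
    return X[idx], y[idx]

# Usage on synthetic data: 500 rows, 20% positives
X_demo = np.arange(1000).reshape(500, 2)
y_demo = np.array([0] * 400 + [1] * 100)
X_small, y_small = stratified_sample(X_demo, y_demo, n=50)
print(X_small.shape, y_small.mean())
```

Because of rounding, the returned sample size can differ slightly from `n` when the classes do not divide evenly.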

Find this file in our repo: Intro_GCP_for_ML/scripts/train_nn.py. It does three things:

1) loads .npz files from local disk or GCS;

2) trains a tiny multilayer perceptron (MLP);

3) writes all outputs side by side (model + metrics + eval history + training.log) to the same --model_out folder.
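The first step can be sketched as follows. This is a minimal example of loading an .npz file from either a local path or a gs:// URI. It is not the code in train_nn.py, which may differ. `split_gcs_uri` and `load_npz` are hypothetical names, and the GCS branch assumes the google-cloud-storage client library used elsewhere in this episode.

```python
import io
import numpy as np

def split_gcs_uri(uri):
    """Split 'gs://bucket/path/to/file' into ('bucket', 'path/to/file')."""
    if not uri.startswith("gs://"):
        raise ValueError(f"Not a GCS URI: {uri}")
    bucket_name, _, blob_name = uri[len("gs://"):].partition("/")
    return bucket_name, blob_name

def load_npz(path):
    """Load every array in an .npz file (local path or gs:// URI) into a dict."""
    if path.startswith("gs://"):
        from google.cloud import storage  # imported lazily; only needed for GCS paths
        bucket_name, blob_name = split_gcs_uri(path)
        raw = storage.Client().bucket(bucket_name).blob(blob_name).download_as_bytes()
        source = io.BytesIO(raw)  # np.load accepts file-like objects
    else:
        source = path
    with np.load(source) as data:
        return {name: data[name] for name in data.files}
```

For example, `train = load_npz("/home/jupyter/train_data.npz")` returns a dict with the keys `X_train` and `y_train`. Passing `gs://...` instead reads the same file straight from the bucket without copying it to disk.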

To test this code, we can run the following:

PYTHON

# configure training hyperparameters to use in all model training runs downstream
MAX_EPOCHS = 500
LR = 0.001
PATIENCE = 50

# local training run
import time as t

start = t.time()

# Example: run your custom training script with args
!python /home/jupyter/Intro_GCP_for_ML/scripts/train_nn.py \
    --train /home/jupyter/train_data.npz \
    --val /home/jupyter/val_data.npz \
    --epochs $MAX_EPOCHS \
    --learning_rate $LR \
    --patience $PATIENCE

print(f"Total local runtime: {t.time() - start:.2f} seconds")

If you hit a numpy version mismatch, uncomment and run the code below. To uncomment multiple lines, select the code and press Ctrl+/.

PYTHON

# # Fix numpy mismatch
# !pip install --upgrade --force-reinstall "numpy<2"

# # Then, rerun:
# import time as t

# start = t.time()

# # Example: run your custom training script with args
# !python /home/jupyter/Intro_GCP_for_ML/scripts/train_nn.py \
#     --train /home/jupyter/train_data.npz \
#     --val /home/jupyter/val_data.npz \
#     --epochs $MAX_EPOCHS \
#     --learning_rate $LR \
#     --patience $PATIENCE

# print(f"Total local runtime: {t.time() - start:.2f} seconds")

Reproducibility test

Without reproducibility, it's impossible to gain reliable insights into the efficacy of our methods, so an essential component of applied ML/AI is ensuring that our experiments are reproducible. Let's first rerun the same code as above to verify that we get the same result.

- Take a look near the top of Intro_GCP_for_ML/scripts/train_nn.py, where we set multiple numpy and torch seeds to ensure reproducibility.
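The exact seeding code lives in train_nn.py. As a rough sketch of what such a block usually covers, the hypothetical `set_seed` helper below seeds Python's `random` module, NumPy, and (if installed) PyTorch, and asks cuDNN for deterministic kernels. Full determinism on GPUs may also require `torch.use_deterministic_algorithms(True)`, which is not shown here.

```python
import random
import numpy as np

def set_seed(seed=42):
    """Hypothetical helper: seed Python, NumPy, and (if available) PyTorch RNGs."""
    random.seed(seed)
    np.random.seed(seed)
    try:
        import torch
    except ImportError:  # allow use in environments without PyTorch
        return
    torch.manual_seed(seed)                     # seeds the RNG on all devices
    torch.cuda.manual_seed_all(seed)            # safe to call even without a GPU
    torch.backends.cudnn.deterministic = True   # prefer deterministic cuDNN kernels
    torch.backends.cudnn.benchmark = False      # disable autotuning that can vary between runs

# Usage: the same seed gives the same random draws
set_seed(0)
first = np.random.rand(3)
set_seed(0)
second = np.random.rand(3)
print(np.array_equal(first, second))
```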

PYTHON

import time as t

start = t.time()

# Example: run your custom training script with args
!python /home/jupyter/Intro_GCP_for_ML/scripts/train_nn.py \
    --train /home/jupyter/train_data.npz \
    --val /home/jupyter/val_data.npz \
    --epochs $MAX_EPOCHS \
    --learning_rate $LR \
    --patience $PATIENCE

print(f"Total local runtime: {t.time() - start:.2f} seconds")

Please don't use cloud resources for code that is not reproducible!
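To compare two runs more rigorously than by eye, you can diff their eval_history.csv files. This minimal sketch assumes that you keep a copy of the file from each run and that the CSV holds a header row followed by numeric values. `histories_match` and the file names in the usage note are hypothetical.

```python
import csv
import math

def histories_match(path_a, path_b, rel_tol=0.0, abs_tol=0.0):
    """Return True if two CSV histories share a header and have (near-)equal numeric cells."""
    with open(path_a, newline="") as fa, open(path_b, newline="") as fb:
        rows_a, rows_b = list(csv.reader(fa)), list(csv.reader(fb))
    if len(rows_a) != len(rows_b) or rows_a[:1] != rows_b[:1]:
        return False  # different number of epochs or different columns
    for row_a, row_b in zip(rows_a[1:], rows_b[1:]):
        if len(row_a) != len(row_b):
            return False
        for cell_a, cell_b in zip(row_a, row_b):
            # With both tolerances at 0, this is an exact equality check
            if not math.isclose(float(cell_a), float(cell_b), rel_tol=rel_tol, abs_tol=abs_tol):
                return False
    return True
```

For example, `histories_match("eval_history_run1.csv", "eval_history_run2.csv")` should return `True` for a properly seeded CPU run. A small `abs_tol` can absorb tiny floating-point differences if you later compare CPU and GPU runs.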

Evaluate the locally trained model on the validation data

PYTHON

import sys, torch, numpy as np

sys.path.append("/home/jupyter/Intro_GCP_for_ML/scripts")
from train_nn import TitanicNet

# load validation data
d = np.load("/home/jupyter/val_data.npz")
X_val, y_val = d["X_val"], d["y_val"]

# tensors
X_val_t = torch.tensor(X_val, dtype=torch.float32)
y_val_t = torch.tensor(y_val, dtype=torch.long)

# rebuild model and load weights
m = TitanicNet()
state = torch.load("/home/jupyter/model.pt", map_location="cpu")
m.load_state_dict(state)
m.eval()

with torch.no_grad():
    probs = m(X_val_t).squeeze(1)     # [N], sigmoid outputs in (0,1)
    preds_t = (probs >= 0.5).long()   # [N] int64 class labels (threshold at 0.5)
    correct = (preds_t == y_val_t).sum().item()
    acc = correct / y_val_t.shape[0]

print(f"Local model val accuracy: {acc:.4f}")

We should see an accuracy that matches the best epoch of the local training run. Note that in our setup, early stopping is based on validation loss, not accuracy.
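To make the stopping rule concrete, here is a minimal sketch of patience-based early stopping on validation loss, replayed over a list of per-epoch losses. It illustrates the general technique, not the exact logic in train_nn.py. `early_stop_point` is a hypothetical name.

```python
def early_stop_point(val_losses, patience):
    """Replay per-epoch validation losses; return (best_epoch, stop_epoch).

    Training stops once `patience` epochs pass without a new lowest loss;
    the checkpoint kept is the one from best_epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch   # new best: this is the checkpoint to keep
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch              # out of patience: stop here
    return best_epoch, len(val_losses) - 1        # ran all epochs without stopping early

losses = [0.69, 0.55, 0.48, 0.50, 0.49, 0.52, 0.47]
print(early_stop_point(losses, patience=3))  # (2, 5): the lower loss at epoch 6 is never reached
```

Because the saved weights come from `best_epoch`, the validation accuracy you measure afterwards is the accuracy at the lowest-loss epoch. That epoch is not necessarily the one with the highest accuracy.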

Launch the training job

In the previous episode, we trained an XGBoost model using Vertex AI's CustomTrainingJob interface. Here, we'll do the same for a PyTorch neural network. The structure is nearly identical: we define a training script, select a prebuilt container (CPU or GPU), and specify where to write all outputs in Google Cloud Storage (GCS). The main difference is that PyTorch requires us to save our own model weights and metrics inside the script rather than relying on Vertex to package a model automatically.
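Because Vertex does not package a PyTorch model for us, the script must write its own files. The sketch below shows one way a script can pick its output folder. It makes two assumptions: (a) Vertex sets the `AIP_MODEL_DIR` environment variable from `base_output_dir`, and (b) the job can reach Cloud Storage through the Cloud Storage FUSE mount at `/gcs/`, which Vertex AI custom training provides. `gcs_uri_to_fuse_path`, `resolve_output_dir`, and `save_metrics` are hypothetical helpers. train_nn.py may handle this differently, for example by uploading with the storage client.

```python
import json
import os

def gcs_uri_to_fuse_path(uri):
    """Map gs://bucket/path to /gcs/bucket/path (assumed Cloud Storage FUSE mount on Vertex AI)."""
    if uri.startswith("gs://"):
        return "/gcs/" + uri[len("gs://"):]
    return uri  # already a local path

def resolve_output_dir(default="./model_out"):
    """Use AIP_MODEL_DIR when running on Vertex; fall back to a local folder otherwise."""
    out_dir = gcs_uri_to_fuse_path(os.environ.get("AIP_MODEL_DIR", default))
    os.makedirs(out_dir, exist_ok=True)
    return out_dir

def save_metrics(out_dir, metrics):
    """Write a metrics dict as metrics.json next to the model weights."""
    path = os.path.join(out_dir, "metrics.json")
    with open(path, "w") as f:
        json.dump(metrics, f, indent=2)
    return path

# Inside a training script, after training (best_val_loss and epochs_run are hypothetical):
#   out_dir = resolve_output_dir()
#   torch.save(model.state_dict(), os.path.join(out_dir, "model.pt"))
#   save_metrics(out_dir, {"final_val_loss": best_val_loss, "epochs": epochs_run})
```

Writing everything through a single `out_dir` lets the same script run unchanged on the Workbench VM (local folder) and on Vertex (bucket folder).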

Set training job configuration vars

To choose an image, look up the corresponding prebuilt PyTorch container at: cloud.google.com/vertex-ai/docs/training/pre-built-containers#pytorch

PYTHON

import datetime as dt

RUN_ID = dt.datetime.now().strftime("%Y%m%d-%H%M%S")
ARTIFACT_DIR = f"gs://{BUCKET_NAME}/artifacts/pytorch/{RUN_ID}"
IMAGE = 'us-docker.pkg.dev/vertex-ai/training/pytorch-xla.2-4.py310:latest'  # cpu-only version
MACHINE = "n1-standard-4"  # CPU fine for small datasets

print(f"RUN_ID = {RUN_ID}\nARTIFACT_DIR = {ARTIFACT_DIR}\nMACHINE = {MACHINE}")

Init the training job with configurations

PYTHON

# init the job (this does not consume any resources until we call job.run())
DISPLAY_NAME = f"{LAST_NAME}_pytorch_nn_{RUN_ID}"
print(DISPLAY_NAME)

job = aiplatform.CustomTrainingJob(
    display_name=DISPLAY_NAME,
    script_path="Intro_GCP_for_ML/scripts/train_nn.py",
    container_uri=IMAGE,
)

Run the job, paying for our MACHINE on demand.

PYTHON

job.run(
    args=[
        f"--train=gs://{BUCKET_NAME}/data/train_data.npz",
        f"--val=gs://{BUCKET_NAME}/data/val_data.npz",
        f"--epochs={MAX_EPOCHS}",
        f"--learning_rate={LR}",
        f"--patience={PATIENCE}",
    ],
    replica_count=1,
    machine_type=MACHINE,
    base_output_dir=ARTIFACT_DIR,  # sets AIP_MODEL_DIR (= ARTIFACT_DIR/model) used by your script
    sync=True,
)

print("Artifacts folder:", ARTIFACT_DIR)

Monitoring training jobs in the Console

- Go to the Google Cloud Console.

- Navigate to Vertex AI > Training > Custom Jobs.

- Click on your job name to see status, logs, and output model artifacts.

- Cancel jobs from the console if needed (be careful not to stop jobs you don't own in shared projects).

Quick link: https://console.cloud.google.com/vertex-ai/training/training-pipelines?hl=en&project=doit-rci-mlm25-4626

Check the bucket contents to verify that the expected outputs are there.

PYTHON

total_size_bytes = 0
# bucket = client.bucket(BUCKET_NAME)

for blob in client.list_blobs(BUCKET_NAME):
    total_size_bytes += blob.size
    print(blob.name)

total_size_mb = total_size_bytes / (1024**2)
print(f"Total size of bucket '{BUCKET_NAME}': {total_size_mb:.2f} MB")

What you'll see in gs://…/artifacts/pytorch/<RUN_ID>/model/:

- model.pt: PyTorch weights (state_dict).

- metrics.json: final val loss, hyperparameters, dataset sizes, device, model URI.

- eval_history.csv: per-epoch validation loss (for plots/regression checks).

- training.log: complete stdout/stderr for reproducibility and debugging.
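Rather than scanning the listing by eye, you can check for the four expected files programmatically. In this minimal sketch, `missing_artifacts` is a hypothetical helper. It takes blob names (for example, from `client.list_blobs(BUCKET_NAME, prefix=...)`) and reports which expected files are absent. The prefix in the usage comment assumes the files sit in the `model/` subfolder that the evaluation code in this episode reads from.

```python
EXPECTED_FILES = ("model.pt", "metrics.json", "eval_history.csv", "training.log")

def missing_artifacts(blob_names, prefix, expected=EXPECTED_FILES):
    """Return the expected file names that do not appear directly under `prefix`."""
    prefix = prefix.rstrip("/") + "/"
    present = {name[len(prefix):] for name in blob_names if name.startswith(prefix)}
    return [f for f in expected if f not in present]

# Usage in the notebook (requires the GCS client and RUN_ID defined above):
#   prefix = f"artifacts/pytorch/{RUN_ID}/model"
#   names = [b.name for b in client.list_blobs(BUCKET_NAME, prefix=prefix)]
#   print(missing_artifacts(names, prefix) or "All expected artifacts found")
```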

Evaluate the Vertex-trained model on the validation data

We can check our work to see whether this model gives the same result as our "locally" trained model above.

To follow best practices, we will simply load this model into memory from GCS.

PYTHON

import io, sys, torch, numpy as np

sys.path.append("/home/jupyter/Intro_GCP_for_ML/scripts")
from train_nn import TitanicNet

# -----------------
# download model.pt straight into memory and load weights
# -----------------
ARTIFACT_PREFIX = f"artifacts/pytorch/{RUN_ID}/model"
MODEL_PATH = f"{ARTIFACT_PREFIX}/model.pt"
model_blob = bucket.blob(MODEL_PATH)
model_bytes = model_blob.download_as_bytes()

# load from bytes
model_pt = io.BytesIO(model_bytes)

# rebuild model and load weights
state = torch.load(model_pt, map_location="cpu")
m = TitanicNet()
m.load_state_dict(state)
m.eval();  # set model to eval mode (trailing ";" suppresses notebook output)

# -----------------
# ALT: download copy of model into VM (costs extra storage)
# -----------------
# # Copy model.pt from GCS (ARTIFACT_DIR already points to your RUN_ID folder)
# !gsutil cp {ARTIFACT_DIR}/model/model.pt /home/jupyter/model_vertex.pt
# !ls

# # rebuild model and load weights
# m = TitanicNet()
# state = torch.load("/home/jupyter/model_vertex.pt", map_location="cpu")
# m.load_state_dict(state)
# m.eval()

As before, we can run our model evaluation code with this model.

To follow best practices, we will read our validation data from GCS and avoid keeping a copy on our VM.

PYTHON

# read validation data into memory
VAL_PATH = "data/val_data.npz"
val_blob = bucket.blob(VAL_PATH)
val_bytes = val_blob.download_as_bytes()
d = np.load(io.BytesIO(val_bytes))
X_val, y_val = d["X_val"], d["y_val"]
X_val_t = torch.tensor(X_val, dtype=torch.float32)
y_val_t = torch.tensor(y_val, dtype=torch.long)  # labels from the GCS copy, so this cell doesn't depend on earlier cells

# get predictions
with torch.no_grad():
    probs = m(X_val_t).squeeze(1)    # [N], sigmoid outputs in (0,1)
    preds_t = (probs >= 0.5).long()  # threshold at 0.5 -> class label 0/1
    correct = (preds_t == y_val_t).sum().item()
    acc = correct / y_val_t.shape[0]

print(f"Vertex model val accuracy: {acc:.4f}")

GPU-Accelerated Training on Vertex AI

In the previous example, we ran our PyTorch training job on a CPU-only machine using the CPU-only PyTorch container set in IMAGE. That setup works well for small models or quick tests, since CPU instances are cheaper and start faster.

In this section, we’ll attach a GPU to our Vertex AI training job to speed up heavier workloads. The workflow is nearly identical to the CPU version, except for a few changes: